Personal Blogs

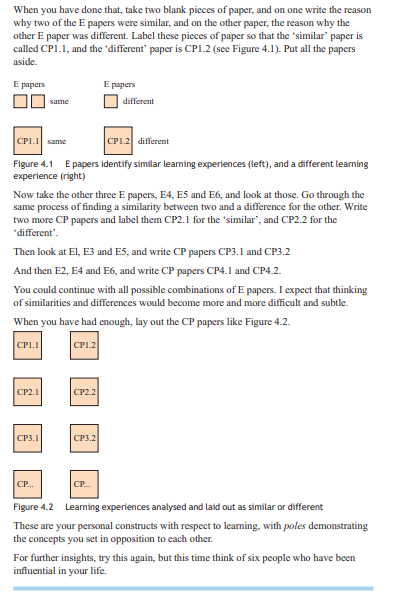

Mary Follett (1868-1935) classified three ways of dealing with conflict.

- Domination - The imposition of one party's view on all the other parties.

- Compromise - Often a degraded outcome, the best that can be agreed by all conflicting views.

- Integration - An outcome where all desires have found a place.

Peter Checkland uses two terms.

- Consensus - Where all parties agree

- Accommodation - Where all parties

I prefer to view the terms Consensus and Accommodation to mean the following.

- Consensus - All parties vote on a set of options and the majority choice is selected. Subtly different to compromise, in that a consensus will leave a range of outcomes. Some people may be dissatisfied, others satisfied.

- Accommodation - Slightly broader than integration, in that it seeks a 'good' outcome for all, rather than the optimal outcome. Integration is where everyone gets their way, whereas accommodation has everyone getting a result they can be happy with.

Additional Conflict Resolution Outcomes

- Indoctrination - Where parties believe they have what they want, but are unaware that they would have chosen differently had their thinking not been influenced by indoctrination.

Dynamics of Conflict

Irrespective of which definitions are preferred, or how they are understood, the dynamics of conflict will always be present. The environment is always changing. Problematic situations will always be in flux. Consequently, conflict will never be solved indefinitely. Nature has to have oscillation between bi-polar points on a spectrum. This means that even if a consensus is reached, humans will begin to forget the original problem their ancestors solved.

"If forgotten, history is doomed to repeat itself."

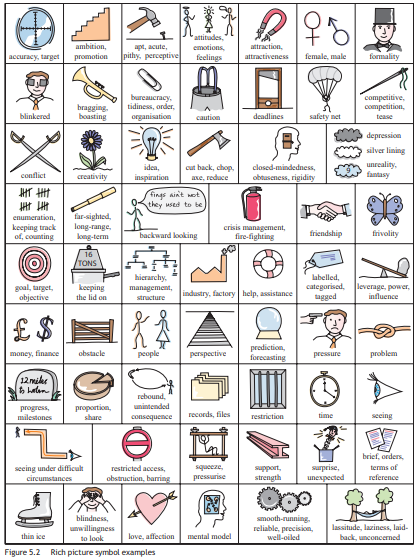

We all hold values and beliefs which govern how we choose to complete our day-jobs. However, sometimes the consequences of our actions do not produce the results we had expected. In these situations, there has been a mismatch between our intended actions and our actual results. When a mismatch occurs, we look for new ways of doing things so that this undesirable consequence doesn't repeat itself. This type of learning behavior is defined as 'single-loop' learning.

Single-loop learning changes how we execute our daily tasks, based on prior performance outcomes. What single-loop learning doesn't do is alter the values and beliefs governing how we should act. Only double-loop learning will shift these governing variables. The power of double-loop learning is that it can free individuals and organisations from accepting excuses for less-than-desirable performance. Below is a real-life example of a situation where double-loop learning could have helped.

Supermarket Merchandising: A Product Feature Problem

- Governing Values.

- Product features must always be full of merchandise and presentable at all times.

- No stock is to be left unaccounted for. (The store's inventory computers must have a record of where everything is).

- No merchandise can be 'plugged'. (Plugging is when product is forced into a gap that belongs to another product).

- No employee overtime is ever allowed.

- Associates are not allowed to order product. (Computers must automatically re-order when product is sold).

- Actions

- An Associate has only 40 mins left in his shift. He notices a product feature is nearly empty. There are lots of gaps on the shelving and there's not enough product left to make this feature look presentable. He therefore decides to dismantle this feature, and replace it with a different product that he has plenty of in the back storeroom. However, the remaining off-coming merchandise now needs to be given a new location. Unfortunately, by this point the Associate has run out of work hours and must clock off. He is not (under any circumstances) allowed to work overtime. Consequently, he has no time to properly scan these off-coming items into the back storeroom. He sees a space on a nearby shelf, which normally holds a different product that the store has currently sold out of. He therefore decides to 'plug' this off-coming product into that gap. He knows plugging is not allowed, but he has run out of time and sees little alternative.

- Consequence

- The Associate ran out of time to complete his tasks correctly (according to the store's governing values). The consequence of this is that the Associate decided to plug merchandise into a location temporarily. When the Store Manager later questions why this product is in the wrong location, the Associate presents a defensive justification for his actions. Despite the Store Manager being annoyed, the Associate quotes the store's policies on 'overtime' and 'unaccounted' stock, and uses these in his defense against his Store Manager. Both the Associate and the Store Manager end this discussion equally frustrated with each other.

- Single-loop Learning (Actual Outcome)

- To prevent the risk of upsetting his Store Manager with the same problem in the future, this Associate learns that he should not attempt to fix the product features when he has less than 1 hour left in his shift. The Associate realizes that had he done nothing, and left this feature looking empty and unfilled, he would not have been blamed. The Store Manager would still have noticed the feature being empty, but would not have been able to attribute blame to the Associate directly (as everyone is responsible for maintaining the product features). The Associate would have been able to hide his strategy behind the guise of 'collective responsibility'.

- Double-loop Learning (Lost-Opportunity)

Had the Store Manager and Associate discussed the problem openly, using a double-loop learning perspective, they could have made systemic improvements to their 'governing values'. Both the Associate and the Store Manager could have altered their 'governing values' towards over-ordering. Going forward, the Associate could adopt a new daily routine. Each morning he would take 5 mins to scan every feature, and order replacement merchandise well in advance. The Store Manager would agree to confirm the additional stock orders. Ordering new merchandise well in advance is a very quick process. Checking daily would ensure the Associate is never placed in the same predicament. It would also ensure all features remain full and presentable, in a much more time-efficient manner.

Had both the Associate and the Store Manager discussed this problem from a double-loop learning perspective, they may have found other solutions that involve altering their 'governing values'. Instead, the Associate adopted his new strategy of not caring about product features (deferring blame) when he has less than 1 hour left on the clock.

- What's the Difference?

Surely, the Associate has just altered his 'actions' with a more effective 'single-loop' solution.

A key difference is that 'governing values' are socially accepted constructs, devised through collective agreement between multiple stakeholders and perspectives. Ordering new product would not have been socially agreeable according to the old governing values, as the Store Manager originally did not want Associates ordering new product manually. This 'double-loop' solution requires the entire Store to adopt a systemic change in viewpoint on its ordering strategy. The 'single-loop' solution did not alter the systemic strategy; it only altered the execution of the existing systemic strategy.

An example of a cognitive map

An example of a causal-loop diagram

Cognitive maps and causal-loop diagrams may appear very similar, however there are subtle differences. The significance of these differences can affect the strategies adopted.

- Cognitive mapping focuses on the thinker, whereas causal-loop diagrams focus on the situation.

- Causal-loop diagrams attempt to model actual causal interconnections within a situation. Cognitive maps attempt to represent people's perceptions and feelings.

- Causal-loop diagrams attempt to answer what is happening, whereas cognitive mapping focuses more on how the perceivers feel about what is happening (sometimes human perceptions of reality are more important than reality itself).

- Causal-loop diagrams are often created by an individual. Cognitive mapping requires the perspectives of many.

Sometimes being able to accurately construct a dynamic model of a system is not enough. A good example of this is the 5-a-day campaign. This is a common national campaign in many countries that encourages people to eat at least 5 portions of fruit and veg per day. This recommendation originated from the World Health Organisation, which suggested everyone should be consuming at least 400g of fruit or veg per day. The human body is a very refined system and consequently scientists and academics have been able to map this system using causal-loop diagram techniques. However, these techniques would reveal that the human biological system demands more than 400g. In fact, some studies show that doubling the daily consumption of fruit and veg (to 800g) significantly increases protection against ALL forms of mortality.

The problem is that everybody views the world differently, and people have developed different perspectives on life and different beliefs about what they value most. It seems national governments tend to understand this phenomenon well. In many Western countries the average consumption of fruit and veg is 3 portions. Expecting society to increase its intake to 10 portions would have been unrealistic. Campaigning for 5 portions (an average increase of 2) is viewed as more realistic and consequently more effective. Causal-loop diagramming would not necessarily have revealed this strategy; however, cognitive mapping would certainly have uncovered the complexity of this problem much better. A rigorous and thorough cognitive mapping exercise would likely reveal the extent to which people value the necessity to eat fruit and veg.
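The portion arithmetic behind this example can be checked in a few lines of Python. This is only a sketch: the 80g portion size is not stated in the campaign description above, it is an assumption obtained by dividing the 400g recommendation by 5 portions, and all the names are made up for illustration.

```python
# Assumption: one portion = 400 g / 5 portions = 80 g.
GRAMS_PER_PORTION = 400 / 5


def grams_to_portions(grams: float) -> float:
    """Convert a daily intake in grams into portions."""
    return grams / GRAMS_PER_PORTION


def portion_gap(current_portions: float, target_grams: float) -> float:
    """Extra portions per day needed to reach a target expressed in grams."""
    return grams_to_portions(target_grams) - current_portions


average_intake = 3  # portions per day, the figure quoted for many Western countries

print(grams_to_portions(800))            # the 800 g level expressed in portions
print(portion_gap(average_intake, 400))  # gap to the 5-a-day target
print(portion_gap(average_intake, 800))  # gap to the 800 g level
```

Under that assumption the campaign asks the average person for 2 extra portions a day, whereas the 800g level would ask for 7, which is the size of the behavioural change a purely causal model would not flag as a problem.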

A final point...

Some messy problems simply have no clear, calculable solution. Logical approaches do not necessarily help in these situations. Anyone who has tried using a causal-loop diagram to model such instances will quickly discover how hard complexity is to map accurately. This is where intuition comes to the rescue. Cognitive maps can better model a group's opinions, perspectives and feelings in situations where no factual solutions are available.

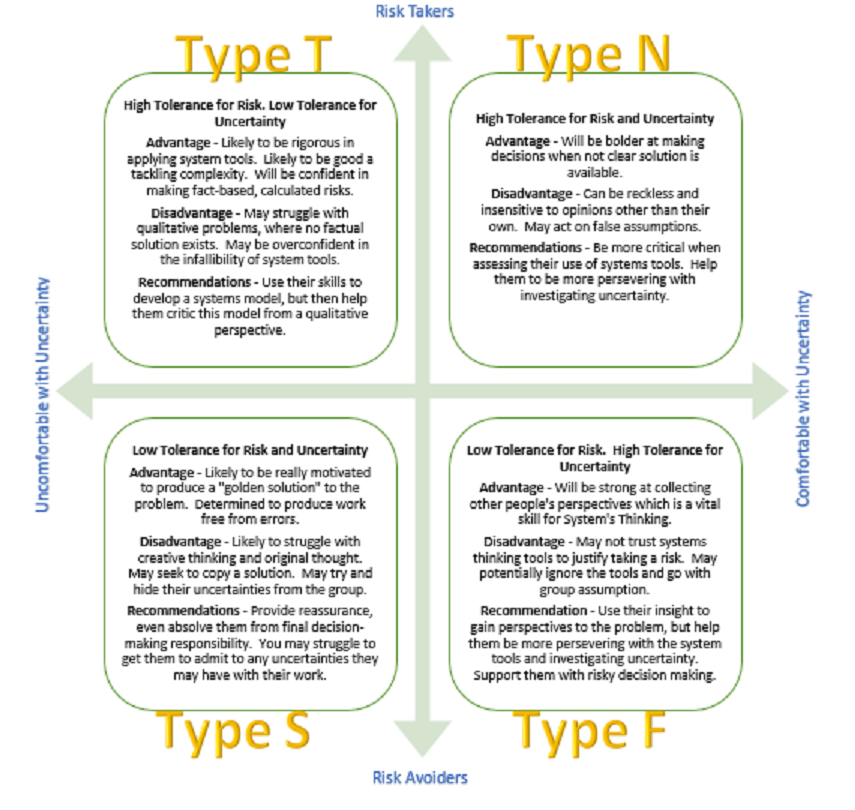

Research suggests that Myers-Briggs Type Indicators affect how we differ in our approach to strategic thinking and use of systems thinking tools. I've devised a grid matrix which attempts to plot MBTI personality types against their possible preferences for tackling strategic problems. I've also provided possible recommendations for helping support different temperaments in their efforts at thinking strategically.

NB. Please don't anyone be offended. I constructed this model in a 2hr spurt of creativity, and can't vouch for its academic rigor.

All strategic problems contain a level of risk and uncertainty to overcome. Risk and uncertainty can produce anxiety in those faced with finding a solution. I believe people develop coping strategies for managing this anxiety at a young age, which over time affect how they prefer to tackle strategic thinking later in life. I further believe the underlying drivers behind these coping strategies are based on their intrinsic tolerance levels for risk and uncertainty. Anticipating someone’s tolerance for risk and uncertainty may assist managers in better understanding how someone is likely to approach strategic thinking. The grid matrix below contains my assumptions on the human biases which may exist when applying systems tools. I have also attempted to assign the MBTI to each quadrant of this matrix. Each MBTI temperament combines two MBTI traits, so this matrix allows for two traits to be circled.

The Concept of "System"

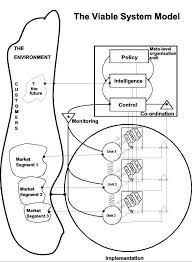

It is not uncommon to think of a system as individual parts that are involved in dynamic interactions. VSM thinking requires that you step away from thinking about the constituent parts and how they interact with one another, toward a focus on the process and the purpose of that process. The system boundaries are thus drawn around the process and not the parts of the organisation.

Surely a lion in a zoo is the same as a lion in its natural habitat? Not necessarily. Yes, the object (in this case a lion) is technically identical, but the system is not. The system of having a lion in a zoo has the purpose of attracting visitors, whereas the system of having a lion in its natural habitat has the purpose of being a predator at the top of a food-chain. Thinking about systems in this way highlights how the individual parts of the system are not all that relevant. The process, and the purpose of the process, is more pertinent when trying to build a viable system model.

The Concept of "Variety"

In VSM thinking the concept of variety also plays an important role and needs to be viewed in a specific way. Take a classroom environment. The class will consist of students who possess a variety of different backgrounds, home situations, prior knowledge, motivations etc. The role of the teacher is to somehow teach the lesson in a manner that reduces the variety of approaches to one that can be broadly viable for the variety of different learning requirements of the students present. This type of thinking about variety has important implications for the VSM. Firstly, the complexity of a system is now measured by the number of different possible variations. Secondly, the Law of Requisite Variety (Ashby) states that the variety of different options in a system must be equal to or less than the variety of options available to the regulating system to which it belongs. If the higher-order system does not contain sufficient options to adapt to all the possible variations it needs to regulate, then the entire system is not suitable to meet all extremes. Therefore there are only two strategic options. Either the variety of the regulating system is increased, or the variety of the sub-system is decreased. So, going back to the classroom example, either the school increases the number of available classes so that the same lesson can be taught in different ways, or the school streamlines the students to reduce the variety of learning needs.
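The classroom example can be sketched in code as a simple coverage check. This is an illustration under assumptions, not a formal statement of Ashby's law: the learning needs, the teaching methods and the function name `unmet_needs` are all hypothetical, and "variety" is reduced here to a set of distinct needs that each require at least one matching response.

```python
from typing import Dict, Set


def unmet_needs(needs: Set[str], methods: Dict[str, Set[str]]) -> Set[str]:
    """Return the learning needs that no available teaching method covers.

    An empty result means the regulator (the school) has enough variety
    to respond to every variation in the system it regulates (the class).
    """
    covered = set().union(*methods.values())
    return needs - covered


# Hypothetical classroom: three kinds of learning need, two teaching methods.
needs = {"visual", "verbal", "hands-on"}
methods = {"lecture": {"verbal"}, "slides": {"visual"}}
print(unmet_needs(needs, methods))  # one need has no matching response

# Option 1: increase the variety of the regulating system (add a class type).
more_methods = {**methods, "lab session": {"hands-on"}}
print(unmet_needs(needs, more_methods))

# Option 2: decrease the variety of the sub-system (stream the students).
streamed_needs = needs - {"hands-on"}
print(unmet_needs(streamed_needs, methods))
```

Both options leave nothing uncovered, which mirrors the two strategic choices described above: add options to the regulator, or remove variation from what it has to regulate.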

"Any viable system contains and is contained in a viable system."

Stafford Beer devised the Viable System Model, based on his theory that a system is only viable by virtue of its sub-systems themselves being viable.

Overview of the Model

The model consists of 5 sub-systems and an environment.

- Operations - the set of activities of the organisation which provide value to the environment.

- Coordination - the set of protocols that coordinate operations so that different operations do not cause problems for each other.

- Delivery - the management activities associated with allocating resources for the operations.

- Development - the management activities associated with understanding the environment and future trends.

- Policy - the balancing activities to ensure the organisation works as a system, especially balancing the decision-making between the two Delivery and Development systems.

The two most critical tensions in the VSM are:

- the tensions between the autonomy of the parts versus the cohesion of the whole.

- the tensions between the current and future needs.

Two fundamental concepts in VSM are:

- Wholeness - Attributes the system has as a whole which the sub-systems do not have as components.

- Emergence - Attributes that emerge as necessary to manage immediate risks/opportunities in the environment.

Too much autonomy and no cohesion and the system's 'wholeness' is lost. Too much cohesion and no autonomy and emergent attributes fail to capitalise on the environmental risks and opportunities that immediately occur.

Using the VSM as a diagnostic tool involves assembling key features into an ideal situation. This 'ideal' is then compared to the perceived reality of the current VSM structure. The differences that are noticed guide action to move the perceived situation towards the ideal.

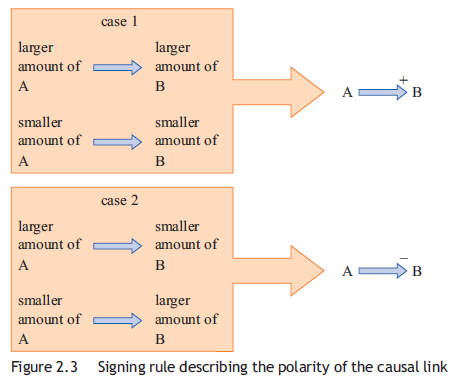

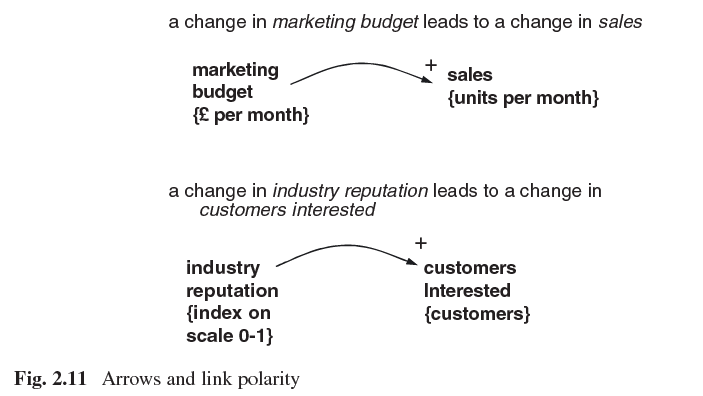

Picking and Naming Variables

The choice of words is vital. Each variable must be a noun. Avoid the use of verbs or directional adjectives. For any variable, always have in mind a specific unit of measure so that the variable can be quantified.

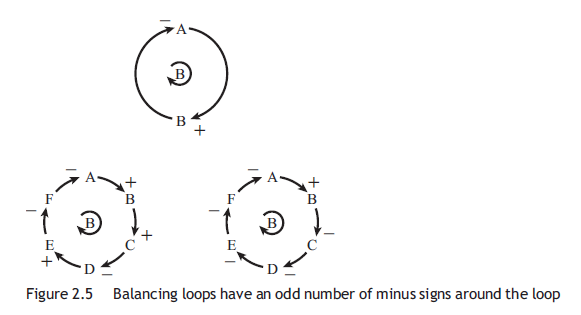

Basic Tips

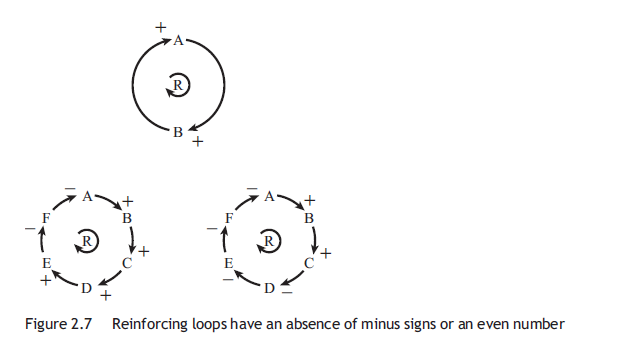

Identify loop types using R or B to signify reinforcing or balancing. Always circle the R or B with a small curve travelling in the same direction as the feedback loop itself.
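A common rule of thumb for deciding between R and B is to count the negative links around the loop: an even number (including zero) gives a reinforcing loop, an odd number gives a balancing loop. The sketch below assumes each causal link has already been labelled '+' (same direction) or '-' (opposite direction); that labelling convention and the function name `loop_type` are assumptions for illustration, not something taken from the diagrams in this post.

```python
from typing import List


def loop_type(link_signs: List[str]) -> str:
    """Classify a feedback loop from the polarity of its causal links.

    Each link is '+' (both variables move in the same direction) or
    '-' (they move in opposite directions).
    """
    if not link_signs or any(sign not in ("+", "-") for sign in link_signs):
        raise ValueError("each link must be '+' or '-'")
    negatives = link_signs.count("-")
    # An even number of '-' links cancels out, so change feeds on itself (R).
    # An odd number means the loop pushes back against change (B).
    return "R" if negatives % 2 == 0 else "B"


# Hypothetical loops, for illustration only.
print(loop_type(["+", "+"]))       # R, e.g. births -> population -> births
print(loop_type(["+", "-"]))       # B, e.g. population -> deaths -> population
print(loop_type(["-", "-", "+"]))  # R, two negatives cancel each other out
```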

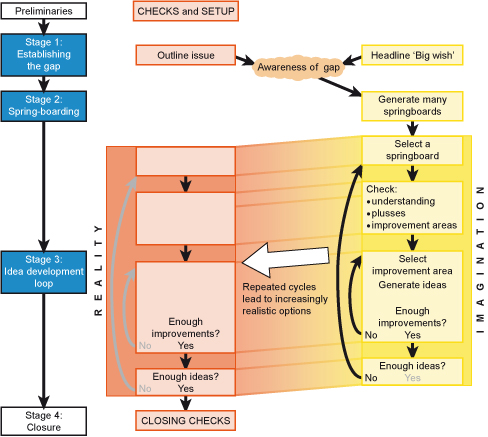

Useful Images

One way to identify the effects of a system is to recognise how situations are described. A rich array of metaphors exists in common English.

- "it only made things worse"

- "no matter how hard I try"

- "no good deed goes unpunished"

- "it came back to bite me"

- "the fix only made things worse"

- "a stitch in time saves nine"

- "it's quicker in the long-run"

- "plan early, plan twice"

We've all heard these expressions before. However, their real value is not in providing advice for an immediate choice of action; rather, they offer clues to the underlying problem that exists within the system. For example, the above list might be better interpreted as...

- "we've just uncovered an unintended consequence in our system"

- "even when working at full performance, this problem cannot be addressed by one person working hard."

- "every time we do the job properly, it has never caused a problem, maybe this is the standard we need to achieve every time?"

- "we cannot cut-corners in this part of the process, as this has a great impact on the system."

- "we've just uncovered an unintended consequence in our system"

- "early intervention at this stage of the process improves our system"

- "the entire system requires that we invest more time to this stage of the process, otherwise there's a knock-on effect afterwards"

- "we are not getting accurate information early enough from the rest of the system, to justify planning this early"

Jay Forrester

The primary advantage of computer models over mental models lies in the way a computer model can reliably determine the future dynamic consequences of how the assumptions within the model interact with one another. A secondary advantage of computer models over mental models is that interrelated assumptions are made explicit. Unclear and hidden assumptions are exposed by the mathematical programs, which causes them to be debated and examined.
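A very small simulation shows both advantages in miniature. This is a hypothetical toy, not one of Forrester's models: the function name `simulate`, the parameter values and the two assumptions written in the comments are all invented for illustration. The point is only that the assumptions have to be written down explicitly before the computer can work out their consequences step by step.

```python
from typing import List


def simulate(stock: float, growth_rate: float, capacity: float, steps: int) -> List[float]:
    """Step a single stock forward in time and return its full history."""
    history = [stock]
    for _ in range(steps):
        # Assumption 1 (reinforcing): growth is proportional to the stock.
        # Assumption 2 (balancing): growth slows as the stock nears capacity.
        inflow = growth_rate * stock * (1 - stock / capacity)
        stock += inflow
        history.append(stock)
    return history


# Hypothetical numbers: start at 10 units, 50% growth per step, capacity of 100.
history = simulate(stock=10, growth_rate=0.5, capacity=100, steps=50)
for step in (0, 1, 5, 10, 50):
    print(step, round(history[step], 2))
```

Anyone who disagrees with the resulting curve has to argue with one of the two stated assumptions, which is exactly the debate a mental model tends to hide.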

"Because all models are wrong, we reject the notion that models can be validated in the dictionary definition sense of 'establishing truthfulness', instead focusing on creating models that are useful... we argue that focussing on the process of modelling rather than on the results of any particular model speeds learning and leads to better models, better policies, and a greater chance of implementation and system improvement."

Enter any troubled company and speak with its employees and one will generally find people perceive reasonably correctly their immediate environments. They can tell you what problems they face, and can produce rational solutions to their problems. Usually the problems are blamed on outside forces, but a dynamic analysis often shows how the internal policies are causing the troubles. In fact a downward spiral can develop in which the presumed solutions make the difficulties even worse.

Donella Meadows

- Get The Beat. Before you disturb the system in any way, watch how it behaves. Ask people who have been around the system a long time. If possible graph actual data from the system.

- Listen to The Wisdom of The System. Aid and encourage the forces and structures that help the system run itself. Remember the current system has often evolved naturally, so seek the value in the current system and reinforce its most successful practices.

- Expose Your Mental Models to The Open Air. Everything you know, hypothesise or assume is nothing more than a mental model. Get your model out there and invite others to shoot it down. Consider all other models plausible until you find evidence to prove the contrary.

- Stay Humble. Stay a Learner. It is just as important to trust your intuition as it is to trust your rationality. Lean on both approaches equally.

- Locate Responsibility in The System. Look for ways in which the system creates its own behaviours. Sometimes outside events can be controlled, but sometimes they can't. Sometimes blaming or trying to control outside influences only blinds one to the easier task of increasing responsibility within the system.

- Make Feedback Policies for Feedback Systems. A dynamic self-adjusting system cannot be governed by a static, unbending policy. Design policies that change depending on the state of the system.

- Honour and Protect Information. A decision-maker can't respond to information he or she doesn't have, or react correctly to inaccurate information.

- Pay Attention to What is Important, Not Just What is Quantifiable. Our culture is often obsessed with numbers, and consequently what gets measured becomes more important than what we can't measure. Decide which is more important, quantity or quality, and ensure the most important is discussed and spoken about.

- Go for The Good of The Whole. Don't maximise parts of the systems while ignoring the whole. Aim to enhance the entire system.

- Expand Time Horizons. The official time horizon extends beyond the payback period of the current investment, or the next election, or even the next generation. When you walk you must pay attention to obstacles at your feet, just as much as you pay attention to the obstacles in the distance.

- Expand The Boundary of Caring. No system is separated from the world itself. Real systems are interconnected and so caring beyond the immediate boundaries is necessary.

- Expand Thought Horizons. Seeing systems as a whole requires perspectives from many different disciplines. All involved must be in learning mode to solve the problem together.

Peter Senge

A learning organisation is one where "people continually expand their capacity to create the results they truly desire." The five disciplines of a learning organisation are Systems Thinking, Personal Mastery, Mental Models, Shared Vision and Team Learning.

- Today's problems come from yesterday's 'solutions'

- The harder you push, the harder the system pushes back

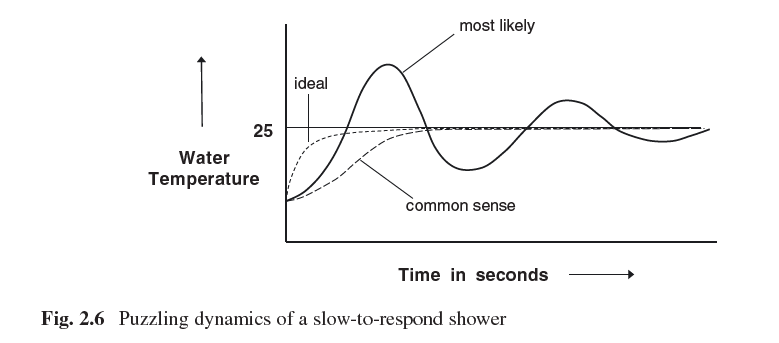

- Behaviour grows better before it grows worse

- The easy way out usually leads back in. The temptation for individuals to adopt the easiest solution tends to creep back.

- The cure can be worse than the disease. Passing the burden onto the intervenor to fix, when ultimately the underlying problem remains unfixed.

- Faster is slower. Virtually all systems have intrinsically optimal rates of growth, which are far less than the fastest growth possible. Excessive growth can result in the wider system seeking to compensate by slowing it down.

- Cause and effect are not closely related in time and space

- Small changes can produce big results - but the areas of highest leverage - are often the least obvious

- You can have your cake and eat it too - but not at once. Many current dilemmas are by-products of static thinking. They only exist in the current situation. Often both goals can be achieved if you are willing to wait for one while focused on the other.

- Dividing an elephant in half does not produce two small elephants. A system boundary must include the most important issues at hand.

- There is no blame. We tend to blame 'someone else'. The cure lies in your relationship with your 'enemy'.

Today I discovered work by the environmental scientist and activist Donella Meadows. She contributed to the construction of a System Dynamics model called World3, which attempted to simulate human population growth by computer. I found a link to a version of the World3 model online (although I can't verify its authenticity) https://insightmaker.com/insight/1954/The-World3-Model-A-Detailed-World-Forecaster

As far as I can tell, Donella Meadows was convinced the population of the world will outgrow the world's capacity to support life within the next 100 years. She wrote a book called Limits to Growth which sold over 10 million copies. I've just purchased this book, because I'm fascinated with what she has to say. She devoted the rest of her life to living in a completely sustainable "closed-loop".

However, I just can't subscribe to apocalyptic views and doomsday theories. Humankind has always lived with fear and dread for its own survival. Jay Forrester (1997) is quoted as saying "that Systems Dynamics demonstrates how most of our own decision-making policies are the cause of the problems that we usually blame on others." Surely this is the very nature of what it means to be "human." When man designed a bicycle, he also created the problem of it not being fast. When man put an engine in the bicycle, he also created the problem of it being so fast that people died. The human solution was not to stop riding motorbikes and settle for slow bicycles! No, the solution was to design faster bikes and better crash helmets.

Humans create solutions, which create new problems, which result in solutions to new problems. Surely this is how evolution is meant to work. I've just purchased a second book called Why e=mc2, and Why Does It Matter? I'm convinced that Einstein's theory of relativity is a missing link that debunks sustainability theories. Nature exists in cycles of creation, then destruction, but there still exists a "constant" in the equation. Surely that "constant" is alternatively called growth, evolution or progress?
