image source: Jisc Learning analytics going live

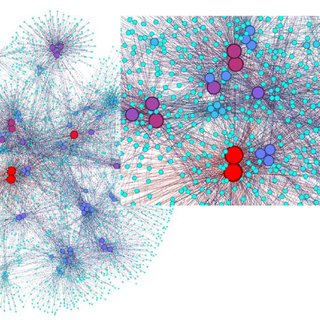

We were asked to review this article in order to expand our understanding of social learning analytics. Rather than examining student interactions in an online learning environment such as an institutional LMS, or the other trails of start-up, demographic, disciplinary, course and log-in data that are commonly analyzed, we focussed on research network analytics of some of the leaders in the field. This was a very different subject from what we had been looking at previously. Another unusual thing was the way we approached the reading. We began by looking at the abstract of the paper, Dawson et al. (2014), 'Current state and future trends: a citation network analysis of the learning analytics field', and noted its aims. That was the end of the conventional approach to reading, as we were then asked to skip to the fourth section of the paper, which lists its practical implications (Section 4.3). These were that the analysis ...

- provides an understanding of how key papers, thematics, and authors influencing a field emerge
- raises awareness about the structure and attributes of knowledge in a discipline and the development of curriculum in the growing number of academic programs that include learning analytics as a topic
- promotes under-represented groups and research methods to the learning analytics community
- fosters the development of empirical work and decreased reliance on founding, overview and conceptual papers
- improves connections to sister organizations such as the International Educational Data Mining Society

(Dawson et al., 2014, p. 238)

The interesting thing about the paper was its crossover between an example of learning analytics and a paper about learning analytics.

Some of its figures and tables were, thankfully, understandable even for a non-expert. Table 1 identifies the ten most-cited papers in the field. Interestingly, the number of citations within the learning analytics literature ranged between 10 and 16, whilst the Google Scholar citation counts differed vastly. This may be because specialist members of the field give equal currency to old and new publications, whereas the Google Scholar audience includes researchers from a much wider range of fields and interests.
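To make the idea of an in-field citation count more concrete, here is a minimal sketch of how such a count could be derived from a citation network. The paper labels and citation pairs are invented for illustration and are not data from Dawson et al. (2014); the function name `in_field_citation_counts` is also my own, not something from the paper.

```python
from collections import Counter

def in_field_citation_counts(citations):
    """Count how often each paper is cited by other papers in the same corpus.

    `citations` is a list of (citing_paper, cited_paper) pairs, i.e. the
    directed edges of a citation network.
    """
    # De-duplicate edges so a paper citing the same work twice counts once.
    unique_edges = set(citations)
    return Counter(cited for _citing, cited in unique_edges)

# Hypothetical corpus of four papers, A to D.
edges = [
    ("A", "B"), ("C", "B"), ("D", "B"),
    ("A", "C"), ("D", "C"),
    ("A", "B"),  # duplicate edge, counted only once
]

counts = in_field_citation_counts(edges)
for paper, n in counts.most_common():
    print(paper, n)
```

A Google Scholar count, by contrast, includes citations from every indexed source rather than from one field's literature, which is one reason the two numbers for the same paper can be so far apart.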

In sum, the article is a reminder that the learning analytics landscape is far more complex than a means to improve teaching and learning. In this case, it was used to build a detailed understanding of how research gains prominence. A systemic and integrated response is required for the approach to do justice to its subject. As the authors note: 'while it is helpful to note that (more active) students...perform better than their less active peers, this information is not suitable for developing a focused response to poor-performing students' (p. 231).

A more in-depth reading of the article would certainly have made the basis of that point much clearer to the reader. However, what I did gain from reading a paper in this way was an impression I could take with me to other readings and to my general knowledge of the breadth of the learning analytics field.

Dawson, S., Gašević, D., Siemens, G. and Joksimovic, S. (2014) Current state and future trends: A citation network analysis of the learning analytics field. In Proceedings of the Fourth International Conference on Learning Analytics and Knowledge (pp. 231-240) (Accessed July 20 2020).

image source: jisc.ac.uk

My last two blogs try to summarise my views on, and knowledge of, the ways big data are used in learning and teaching (where it can be referred to as educational learning analytics) and the ethical practices related to it.

The following tries to highlight the relatedness of, but also the differences between, learning analytics (LA) that is learner/teacher focussed and LA that informs the wider body of educators, such as managers, administrators, the institution, government and other funding bodies. It also considers the reasons why LA emerged in the 2000s.

Reasons for the emergence

The US has also seen a sharp improvement in school results over the same period and it could in part be put down to the increase and improvement in learning analytics initiatives and theory.

Used to benefit educators

Institutions are of course very interested in student performance because it reflects on the institution and its popularity, and affects its funding and staff jobs. They are trying to reach certain external and internal key indicators. For those reasons, they are also interested in things that are not about the individual: increasing student numbers, administrative and academic productivity, cost-cutting for profitability, and the data related to these. This focus is known as academic analytics. They are interested in general account analytics, which allow them to see what students and teachers are doing within the account. Activity by date allows the admin to view student participation in Assignments, Modules and Discussions, and teachers' completion of Grades, Files, Collaborations, Announcements, Groups, Conferences, etc. In general, one can view how users are interacting with the courses in the term. This means the content can be adapted to improve efficiency, productivity, and adherence to policy and practice, based on deductions and predictions made from the use of content.
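As a rough illustration of what an 'activity by date' view involves, the sketch below groups a handful of invented log records by day and by activity type. The record format and the function name `activity_by_date` are assumptions made for this example; they are not the export format or API of any particular LMS.

```python
from collections import Counter, defaultdict
from datetime import date

def activity_by_date(events):
    """Return {date: Counter({activity_type: count})} for a list of events.

    Each event is a (date, user, activity_type) tuple.
    """
    summary = defaultdict(Counter)
    for day, _user, activity in events:
        summary[day][activity] += 1
    return dict(summary)

# Invented sample log, for illustration only.
events = [
    (date(2020, 7, 20), "student_1", "Discussions"),
    (date(2020, 7, 20), "student_2", "Discussions"),
    (date(2020, 7, 20), "teacher_1", "Grades"),
    (date(2020, 7, 21), "student_1", "Assignments"),
]

summary = activity_by_date(events)
for day in sorted(summary):
    print(day.isoformat(), dict(summary[day]))
```

Even a simple tally like this shows why such views suit managers more than teachers: it says how much activity there was, not who needs help or why.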

Used to benefit students

I categorize all the above (under benefitting the educator) as also benefitting the learner. It would reach the learner via tutorials with the teacher and via reports, if any. However, the analytics that students are likely to access themselves mainly comprise only their grades and records of their assignments. Students can use these to track and assess their progress, so that they can see where they need to improve as they go along. Teachers should use educational data mining (EDM), the 'analysis of logs of student-computer interaction' (Ferguson, 2012), to improve learning and teaching. Romero and Ventura (2007, cited in Ferguson, 2012) identified the goal of EDM as ‘turning learners into effective better learners’ by evaluating the learning process, preferably alongside and in collaboration with learners. These data are often only available to students if the teacher makes them viewable.

In my situation, the LMS is compatible with various apps such as Turnitin (a plagiarism checker). Students also have access to its analytics, which are used at the drafting phase of the writing course, and at the end of the course only if students query their report writing score and plagiarism has some relevance.

Challenges

Some of the educational challenges in the environment that I work in include adapting to online as opposed to f2f teaching. One way to sum up the challenge is that online teaching is completely different because all contact and communication have technology running through them. One of the challenges involved in implementing learning analytics is mistrust of how data will be used. Students I work with sometimes avoid leaving digital traces for fear of these becoming a means of covertly 'assessing' them. This is why their focus is on final tests, where they are fully aware of what performance data will be collected and how it will be used. 'Therefore, it is necessary to make the goals of the LA initiative transparent, clarifying exactly what is going to happen with the information and explicitly' (Leitner et al., 2019, p. 5). Researchers also point to privacy and ethical issues.

Recommendations

1. Teachers should get training in the use of data analytics in the classroom.

2. There should be an open dialogue about what learners' rights to their own learning analytics should be, what learning analytics should be available to them, and how to give them access (including training in accessing their own data).

3. Analytics is too management centred, dominated by data mining and academic analytics, and it is often not shared with teachers. Learning analytics needs to be much more centred on the classroom and the teacher/student relationship.

I think the adoption of these recommendations could improve engagement, ownership, motivation to learn better, and also improve learning directly.

Cameron, K. and Smart, J. (1998) Maintaining effectiveness amid downsizing and decline in institutions of higher education. Research in Higher Education, 39(1), pp.65-86.