A couple of weeks ago I was asked to prepare a short contribution about group work for a TM253 module team meeting; TM253 is a new second-level computing module that is being developed. Reflecting on this, I realised that I’ve experienced online group work from a number of different perspectives: as a student, a tutor and a module chair.

What follows are some rough notes that summarise my experiences, followed by a really simple conceptual framework that relates to online academic group work. From the perspective of a student, the framework might be a useful tool to understand what happens in your module. From the perspective of a module team member, it might be useful to understand how to think about group work.

Student experience: A335 Literature in transition

For one of the A335 TMAs, students had to contribute to a collaborative Wiki. We had to find some academic articles that related to some of our set texts, and share a summary of what we found, a couple of useful quotes, and a reference. In turn, we would get some marks for our trouble.

We would then use what was submitted within a longer essay. I really liked this activity, since we ended up with quite a detailed bibliography that we could also refer to later if we ever needed to. It also directed our attention to the texts that were not the focus of our substantive essay.

Tutor experience: M364 Interaction Design

I tutored on M364 Interaction Design for ten years, starting in 2006. When I started, we all used a product called FirstClass, which was eventually replaced by a version of the Moodle VLE. One of the TMAs focused on evaluation. Students had to take a sketch of a design that they produced in a design TMA and share it with a fellow student. Each student would then carry out what is called a heuristic evaluation of the sketch they had been given, and suggest enhancements.

One of my duties as a tutor was to pair students together into sub-forums where they would share sketches and evaluation results. These areas had weird names (apparently names are friendlier than numbers) but I can’t remember what any of them were called. On the occasions where I had an odd number of students I would put them into groups of three. When students didn’t submit their sketches, I would share a sketch that had been prepared by the module team.
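For readers who think in code, the pairing rule described above can be sketched in a few lines of Python. This is an illustration only: the function name `pair_students` and the student names are hypothetical, and it assumes that when there is an odd number of students, the one left over joins the last pair to make a group of three.

```python
def pair_students(students: list[str]) -> list[list[str]]:
    """Split students into pairs; with an odd number, the last group has three."""
    # Take the students two at a time, in the order given.
    groups = [list(students[i:i + 2]) for i in range(0, len(students), 2)]
    # Assumption: a student left on their own joins the previous pair.
    if len(groups) > 1 and len(groups[-1]) == 1:
        leftover = groups.pop()
        groups[-1].extend(leftover)
    return groups


if __name__ == "__main__":
    # Hypothetical tutor group of five students.
    for group in pair_students(["Asha", "Ben", "Carys", "Dev", "Ewan"]):
        print(group)
```

A real allocation would also need to handle students who never submit a sketch, which is where the sketch prepared by the module team comes in.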

The whole reason for doing this was to enable students to gain a little bit of experience of collaboration. There was also the point that different evaluators can find different things. It kind of worked, but it was always a bit clunky, and it always took a bit of explaining.

Module chair experience: TM354 Software Engineering

Software engineering is a team sport. Software engineers use all kinds of tools to communicate with each other. They use formal diagrams, sketches on whiteboards, post-it notes, requirements documents, and a myriad of other representations. With this in mind, it would be remiss of us not to attempt to share an experience of team working.

One of the processes that TM354 talks about is agile. Agile development teams are small teams that work together to solve specific problems. Members of agile teams are constantly talking to each other. Talking makes software real. Sharing of sketches and diagrams makes software real, and this is what happens in TM354.

In a couple of TMAs, students create sketches and then share them using an online tool called ShareSpace. Fellow students are then invited to make helpful, constructive comments about the sketches that have been submitted. In turn, students then go on to refine their earlier diagrams, reflecting on what changes they might have made. The big idea is to simulate some of the work that can happen within module teams.

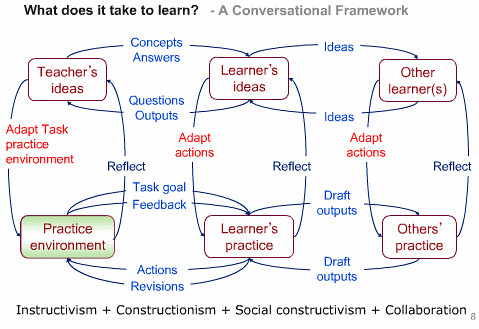

Conceptual framework

Here’s what I’ve come up with: a simple framework.

Groups vs Teams

There’s an important difference between groups and teams. A group is a set of people whose individual contributions may help to solve a defined problem, but those contributions are separate from each other. A team is a group of people who solve a defined problem whilst also knowing something about each member’s interests, abilities, knowledge and skills. The members of a team work closely with each other. A team needs time to form.

More group work takes place than teamwork.

Authentic vs Artificial

The key question here is: does the assessment activity directly reflect the skills that a module aims to develop, or does it reflect what a student may be doing after they graduate? An interesting authentic assessment scenario I have heard about, which relates to software engineering, is one where requirements change part way through an assessment; a theoretical customer may discover a new set of requirements they had never thought of before. This notion speaks to a sub-dimension: what is expected vs the possibility of the unexpected. In the real world, new situations can emerge, and things can go wrong.

As suggested, an artificial assessment is one that aims to test learning outcomes in a way that may be distinct from how those learning outcomes may be applied in real situations. Ideally, assessments should be authentic, but when students have a lot to study, assessments are typically artificial with some authentic elements.

Real tools vs Simplified tools

This follows on from the earlier dimension. Should a module make use of tools that are used ‘out there in the world’, which might be difficult to understand and work with, or should a module team use tools that are designed to help understanding?

In computing, a good example of this is the choice between introducing students to a fully-fledged integrated development environment (IDE), such as Microsoft Visual Studio Code, and introducing them to an IDE that has been specifically developed to help students understand concepts. An example of a useful but restricted tool is BlueJ. Put another way, do we need to provide guard rails?

This topic has been subject to academic debate. My own view is to go immediately with industrial-strength tools, if it is possible to do so. Academics should be able to offer practical guidance to show how these tools are used.

Interaction matters vs Outcome matters

This pair reflects a design aim from the module team and those writing the assessment. What is the overall objective of the assessment? Are skills and knowledge represented by the interaction or the process, or by the product at the end? A related question is whether students should reflect on the actions undertaken by the team, or on the effectiveness of the final outcome.

The process is always important, whether it is writing an essay, or writing software.

Actions for points vs Completion for points

This dimension follows on directly from the previous dimension and relates to the question of what is done to gain credit for an assignment. Should completing tasks, and showing that you have completed them, gain credit, or should we assign marks for the completed artifact? In some ways, this is a bit like the idea of ‘showing your working’ within a maths assignment.

Looking at this practically, there should be points gained for completing (and compiling) evidence of tasks.

Individual scores vs Team scores

If there is a large team supported by a small number of high performing individuals, how should the marks be allocated? Should the overall result reflect the outcome, or should it reflect the individual contributions? The answer to this may well relate to what is being assessed.

Ultimately, there should be some actions and work that enable the contributions of individual students to be distinguished from each other.

Tutor oversight vs Team autonomy

This relates to the amount of scaffolding a tutor should provide, and the extent of the guidance provided whilst teamwork (or groupwork) is taking place. Within this, there is the implicit question of whether a tutor has a ‘plan b’ just in case something goes wrong. This is also connected to the extent to which the module team provides pre-selected tools, guidance or frameworks.

It takes time to observe what occurs within a team, and it takes time (and experience) to productively intervene if things get difficult. Given that tutors often do not have a lot of time, the responsibility for setting everything up and structuring activities should fall to the module team.

Repeated scenarios vs New scenarios

This dimension relates to an issue that the module team needs to resolve. Should they adjust an existing scenario for every module presentation, or should they endeavour to create a new scenario? The risk of creating a new scenario is that it may introduce problems (which could, of course, be authentic, but not necessarily related to the learning outcomes that need to be assessed).

What typically happens (in my experience) is that a scenario framework is created, and changes are made within that framework.

Reflections

Collaborative work is a term that covers both group work and teamwork. It is a topic that is featured within descriptions of degree level qualifications that are provided by the QAA. Given the nature of higher education, it is difficult to create collaborative assessments that are intrinsically authentic. Perhaps the best we can do is to create assessments that use authentic tools. When considering teamwork, it is also necessary to consider safety, in terms of both the integrity of the assessment process and the emotional and physical safety of those who participate. Guard rails are important.