As a part of my involvement with three different software engineering modules, I’ve recently spent a few days looking at Git and GitHub. There are a couple of reasons to do this. The first is to get up to speed with current software engineering technologies and tools. The second reason is to try to get a bit of inspiration for ideas for a software engineering project that uses the Java programming language.

What follows is a set of notes that I have made whilst having a good look around. (I expect I will be updating this post from time to time as I find out more.) It also shares some useful resources that I have collated, along with some links that I may wish to return to later. I have written it in the style that I might write module materials.

Before looking at some repositories, you will look at the basics (this is the style adopted for module materials), and then look at some useful (and related) resources.

The basics

Git is a version control system (VCS). It is an essential software engineering tool that helps you to keep your software safe and secure, whilst also enabling you to collaborate with other software engineers. Your fellow collaborators might be in the same building, or might be located in an entirely different country.

A key idea is that Git can help to save you from yourself. If you make a mistake whilst creating your software, it gives you the ability to return to an earlier version.

An important point is that Git and GitHub are not the same thing. You use the Git software to store your files in a repository. Your repository can be held in GitHub. Here are links to these two key elements:

Through Git, you can access the software files held in a GitHub repository in different ways. You can use what is known as a command line, or you can use a graphical user interface (GUI). You might also access the files through an Integrated Development Environment (IDE).

When learning to use Git, it is recommended that you use what is known as a command line. Think of the command line as a clear and direct way to give instructions (commands) to a computer. Professionals often use command lines, since they are more powerful and more expressive than using a limited set of instructions that can be given through a user interface.

If Git has been installed on your computing platform, you will be able to issue Git instructions through a command line that is available through a terminal window. The idea of a ‘terminal’ dates back to the early days of computing, when users would use the facilities of a larger (and much more expensive) mainframe computer through a ‘dumb terminal’, which is, essentially, a screen and a keyboard without any accompanying computing capabilities. A terminal window is, simply, a way to issue instructions (input) and to receive confirmation that they have been carried out (output).

Some videos

Whilst having a look at all this, I have discovered some useful videos. Here are three that I have found most useful:

Out of all these, I prefer the ‘for dummies’ tutorial. A useful activity is to make a note of the commands that were used in this video.

Some teaching materials

I’ve been made aware of some useful teaching tools and materials. We were made aware of GitHub Classroom during a recent school seminar. There’s a lot to this, and I’ve not spent any time looking at it, but it strikes me as a really powerful tool.

A really useful (and practical) resource is the Git tutorial that is provided by Software Carpentry, which “develops and teaches workshops on the fundamental programming skills needed to conduct research”. It aims “to provide researchers high-quality, domain-specific training covering all aspects of research software engineering”. Since computing is a tool for carrying out research, it offers a useful Git tutorial for novices.

Following on from the earlier comment about Git commands being issued through a terminal window, there is also something called a ‘shell’. A shell is a command line program that runs within a terminal window, and is used to give instructions to a computer. The term comes from the idea that it is a ‘thin cover’ around your computer’s operating system. Some operating systems can have different ‘shells’, and some of them can have unusual names, like Bash. More information about what shells are, and how they can be harnessed, is available through Software Carpentry’s The Unix Shell resource for novices.

Some books and articles

A really useful book to look at is:

Ponuthorai, P.K. and Loeliger, J. (2022) Version Control with Git. 3rd edn. O’Reilly Media.

This book is available in the OU library through the O’Reilly Safari bookshelf.

Appendix A, History of Git, is particularly useful and summarises a history that I was never aware of. It begins as follows: “No cautious, creative person starts a project nowadays without a backup strategy. Because data is ephemeral and can be lost easily—through an errant code change or a catastrophic disk crash, say—it is wise to maintain a living archive of all work.” It goes on to highlight its significance and use within teams: “For text and code projects, the backup strategy typically includes version control, or tracking and managing revisions. Each developer can make several revisions per day, and the ever-increasing corpus serves simultaneously as repository, project narrative, communication medium, and team and product management tool.”

The history of Git is introduced through the following paragraph: “Git, a particularly powerful, flexible, and low-overhead version control tool that makes collaborative development a pleasure, was invented by Linus Torvalds to support the development of the Linux kernel, but it has since proven valuable to a wide range of projects.” The appendix presents a brief summary of some influential predecessors.

On the topic of predecessors, a colleague shared the following article, which should be available to all students:

Ruparelia, N.B. (2010) The history of version control. SIGSOFT Software Engineering Notes, Vol 35, No 1, pp. 5–9. Available at: https://doi.org/10.1145/1668862.1668876.

On the surface of it, it is quite a dry and difficult read, especially if you are unfamiliar with the background or terminology.

The paper presents a ‘brief taxonomy of version control’. Rather than being a set of categories, this section is a set of important terms and concepts, some of which you may recognise from the earlier video. It also mentions something called IT Service Management (ITSM), which is a topic that is discussed in a later module.

It is worth saying something about the notion of Open Source Software (OSS), since this is a key element of the ‘historical perspective’ discussion. OSS is software where the source code, the lines of code that collectively create the software, is available for other people to use and modify. OSS can, of course, be contrasted with proprietary software, where the source code typically cannot be viewed or changed by others.

The article summarises the story of the development and refinement of VCSs. You don’t need to worry about how versions of software are saved in a repository. The evolution of the approaches has been driven by developments in computing, such as increases in processing power, storage capacity, and network connectivity. The most important narrative in this story relates to the open source version control systems, rather than the commercial (proprietary) equivalents.

The article, along with the appendix in the O’Reilly text, suggests the emergence of a tension between a proprietary version control system, and the open source community that supports the development of the Linux operating system. The article states ‘not being allowed to see metadata and compare past versions was a major drawback of the community version of BitKeeper, and one that specifically inconvenienced most Linux Kernel developers’ (Ruparelia, 2010, p.7). Metadata is, of course, data about data. One of the advantages of version control systems is that you can add metadata, in the form of explanatory comments, when you make a change to your software. Metadata enables you to keep track of what has changed, and why those changes have been made.
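To make the idea of metadata a little more concrete, here is a minimal Java sketch of a toy version history. It is only an illustration: the class name TinyHistory and its methods are made up for this post, everything is held in memory, and it is not how Git actually stores versions. It simply shows a snapshot being saved alongside an explanatory comment, and an earlier version being retrieved later.

```java
import java.util.ArrayList;
import java.util.List;

/** A toy, in-memory version history. Hypothetical names; not how Git works internally. */
public class TinyHistory {

    /** One saved version: the content, plus a comment explaining the change (metadata). */
    private static final class Version {
        final String content;
        final String message;

        Version(String content, String message) {
            this.content = content;
            this.message = message;
        }
    }

    private final List<Version> versions = new ArrayList<>();

    /** Saves a snapshot with an explanatory comment and returns its version number. */
    public int commit(String content, String message) {
        versions.add(new Version(content, message));
        return versions.size() - 1;
    }

    /** Returns the content exactly as it was at the given version number. */
    public String restore(int versionNumber) {
        return versions.get(versionNumber).content;
    }

    /** Lists the comments, oldest first, so you can see what changed and why. */
    public List<String> log() {
        List<String> lines = new ArrayList<>();
        for (int i = 0; i < versions.size(); i++) {
            lines.add(i + ": " + versions.get(i).message);
        }
        return lines;
    }

    public static void main(String[] args) {
        TinyHistory history = new TinyHistory();
        int first = history.commit("users: alice", "Add first user");
        history.commit("users: alice, bob", "Add bob, as requested");

        // Print the change log, then go back to the first version.
        for (String line : history.log()) {
            System.out.println(line);
        }
        System.out.println(history.restore(first));
    }
}
```

A real VCS records much more than this (who made the change, when, and how each version relates to the others), but the principle of pairing each saved version with a comment is the same.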

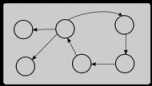

The open source section of Ruparelia’s article ends by highlighting the beginning of the development of Git. It is also useful to look at the ‘version control in the future’ section, which emphasises something called a DVCS, a distributed version control system: ‘DVCS is becoming increasingly popular in the open source community and, over time, will replace centralized system’ (Ruparelia, 2010, p.8). With Git being a DVCS, and becoming the dominant tool, this prediction has come true.

Ruparelia also suggests that a VCS will ‘become increasingly integrated with a) the entire software life-cycle, from requirements capture to defect tracking, and b) the broader configuration and change management tools and processes as defined by ITSM frameworks such as ITIL’ (ibid., p.8). The point here is that it is important to keep track of a variety of digital artefacts within the software development lifecycle: requirements, code and tests. Also, software engineering processes must necessarily adapt and change. To keep track of what is changing, it is useful to place these artefacts under version management.

GitHub has become the world’s largest source code repository, with tens of millions of public projects. This concentration of code has had an interesting effect. Source code has become training data for generative artificial intelligence software, which has led to the development of an ‘AI accelerator’ known as GitHub Copilot. This is, of course, not without concerns about ethics, privacy and security. There are also legitimate concerns about how much energy AI tools consume, which is a current topic of research.

To complement Ruparelia’s article and the earlier video resources, you might find this summary article useful:

Munezero, P. (2024) Top Ten Git Commands for Systems Engineering Digital Artifacts Authors and Analysts, IEEE International Systems Conference (SysCon), Montreal, QC, Canada, pp. 1-3, Available at: https://doi.org/10.1109/SysCon61195.2024.10553438.

Look at the list of commands you have noted down from the videos you have watched. Are there any differences?

Exploring GitHub

I spent about a day looking through GitHub to get some ideas about Java projects.

I quickly noticed a couple of things. The first is that some projects have been given ‘stars’, which I assume relate to their popularity. I soon decided to look through pages of projects until I got through to ‘zero stars’. Secondly, different projects use different software licences.

Software licensing is a topic all of its own, and I’m no expert. Two important licences that I’m aware of are the GNU General Public Licence (GPL), which is a ‘copyleft’ licence, and the BSD licence. With the GPL, you can take existing software and code that is published under the GPL, modify it, use it, and distribute it (along with its source code), also under the GPL. A significant restriction is that you are not allowed to turn it into proprietary software: if you distribute a modified version, it must remain under the GPL, with its source code available. A BSD licence (which is similar to the MIT and Apache licences) is different, in the sense that you can include the code in proprietary software that is distributed without its source code. The point here is that if you look at code, you also need to keep in mind its licence.

My starting point was a site called CodeTriage, which highlights Java projects that you can contribute to. It was all a bit overwhelming, but Book Project piqued my attention. This software was, however, a web-based project. Perhaps there were Java projects that ran as a simpler desktop application? From here I decided to look at GitHub topics.

Topics

Here are some topics that I had a browse through. Do note that the numbers that are shared are correct at the time of writing. By way of comparison, I’ve also provided a link to Python topics:

Turning to the idea of a standalone Java application, I clicked through the following topics. Swing is the name of a cross-platform user interface library:

- Swing-gui: 3363 public repositories.

- Java-swing: 1680 public repositories.

- Java-swing-application: a mere 153 public repositories.

- Java-swing-gui: only 113 public repositories.

Whilst browsing through these repositories, I have noticed some other interesting topics (or tags):

- Group-project: 1010 repositories.

- Java-project: 412

- Netbeans-project: 354

- Java-mini-project: 13

I'm assuming that these lists of projects do not include those that are hosted through GitHub Classroom.

Browsing through the repositories

Whilst browsing through the repositories, mainly looking at standalone Java desktop projects, I started to notice some patterns and themes amongst repositories.

At the time of writing, TM354 Software Engineering makes use of a case study, a hotel booking system. Interestingly, there are quite a lot of them. Here are three examples. One of my tasks will be to have a look at these (and other examples) in a bit more detail.

- HotelManagementSystem: 15 stars, MIT licence.

- hostel-management-system: 2 stars, MIT licence.

- Hotel-Reservation: 1 star, no licence.

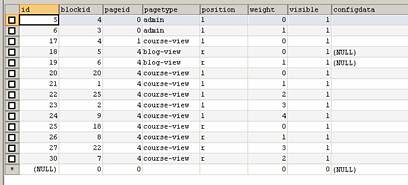

TM354 also contains some examples of other systems, such as Library management systems, which are described using the UML modelling language. I found seven different examples of these, written as standalone Java projects, which also made use of a SQL database. Here is a notable example:

- Library-Management-System-JAVA: 459 stars, MIT licence.

I also found different types of management applications. I found an inventory management system, a restaurant management application, a drink ordering tool, a dental surgery application, a school administration system, a gym management tool, and a laboratory management tool.

I also found a good number of games that run as Java Swing applications, such as Connect 4, tic-tac-toe, Sudoku, Othello, Battleships, Minesweeper, Tetris, and Space Invaders. There were also some study tools, such as software to create flash cards and quizzes (which reminds me of a project that is applied in TM112 Introduction to Computing and Information Technology 2).

It is notable that a lot of these GitHub projects are also clearly computing projects for software development or software engineering classes. This takes me to look at a topic that I mentioned earlier: group projects (over 1000 public repositories).

Group Projects

A range of different languages and technologies are used with group-project topic repositories. Without digging too far, it is possible to see repeated patterns. There seem to be management systems of various flavours, games and tools.

Agile tools projects

Reflecting on the idea of tools or utilities takes me to look at another category: agile software development tools. Narrowing this further, I remembered the idea of a Kanban board, an ‘information radiator’ that is used to share project status with agile teams. Since software engineering can be carried out at a distance, Kanban boards have moved from the physical to the virtual. Without really looking very hard, I found six different examples of Java-based Kanban board projects.

Here are three of them:

- Kanban-board: 189 stars, no licence.

- Joindesk: 78 stars, MIT licence.

- ScrumTool: 4 stars, MIT licence.

Project ideas

I really like the idea of a project that relates to the process of software engineering, which is why I went looking for Kanban board projects. Maybe there could be other projects that relate to requirements gathering (requirements engineering), or perhaps even testing. It would be interesting to look in GitHub to see what I can find. I have also noticed that there were quite a few ‘quiz and test’ projects, or projects that relate to flashcards.

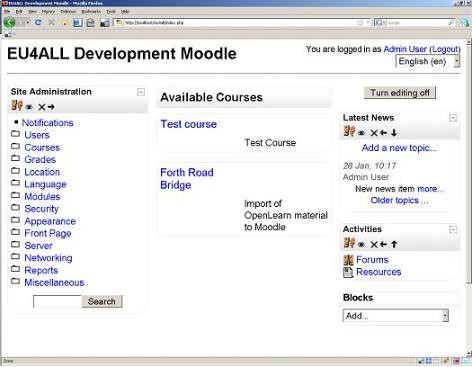

All this has led to a collision of ideas. Over ten years ago, the university initiated a project that was known as SocialLearn, ‘a cross-University project to combine the best of social web technologies with those of online social learning’. It was a grand idea, but having a quick read of a paper that was published at the time suggests that it was way too broad and ill defined. Technology was prioritised over pedagogy, and what was described wasn’t awfully clear. Clear requirements are essential.

Here's my idea: a social quizzing application. One student can challenge another student with questions that relate to their study of a particular module. These questions might be from a ‘question’ library, or they might be ‘one off’ questions written by themselves. Rather than having answers marked by computers, they are ‘assessed’ by fellow students. The quality of questions can be graded. Requirements can be added. Also, an ‘evil genius’, who works in the module team, could post conflicting requirements which everyone has to figure out. Dealing with security is also going to be a necessity.
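To get a feel for what the core of a social quizzing application might look like, here is a rough Java sketch of a single challenge that is marked by fellow students. Everything in it is an assumption made for illustration: the class names, the rule that students cannot mark their own answers, and the mark scale of 0 to 5 are all hypothetical, and a real design would begin with clear requirements.

```java
import java.util.ArrayList;
import java.util.List;

/** Hypothetical sketch of a peer-assessed quiz challenge; all names are illustrative. */
public class SocialQuizSketch {

    /** A question put by one student to another, with the answer marked by peers. */
    static class Challenge {
        final String challenger;
        final String respondent;
        final String question;
        private String answer;
        private final List<Integer> peerMarks = new ArrayList<>();

        Challenge(String challenger, String respondent, String question) {
            this.challenger = challenger;
            this.respondent = respondent;
            this.question = question;
        }

        void submitAnswer(String text) {
            this.answer = text;
        }

        /** Records a mark (assumed scale: 0 to 5) from a fellow student. */
        void addPeerMark(String marker, int mark) {
            if (answer == null) {
                throw new IllegalStateException("There is no answer to assess yet");
            }
            if (marker.equals(respondent)) {
                throw new IllegalArgumentException("Students cannot mark their own answers");
            }
            if (mark < 0 || mark > 5) {
                throw new IllegalArgumentException("Mark must be between 0 and 5");
            }
            peerMarks.add(mark);
        }

        /** Mean of the peer marks, or 0.0 if nobody has marked the answer yet. */
        double averageMark() {
            return peerMarks.stream().mapToInt(Integer::intValue).average().orElse(0.0);
        }
    }

    public static void main(String[] args) {
        Challenge challenge = new Challenge("asha", "ben", "What is a Kanban board?");
        challenge.submitAnswer("An information radiator that shows project status.");
        challenge.addPeerMark("carla", 4);
        challenge.addPeerMark("asha", 2);
        System.out.println("Average peer mark: " + challenge.averageMark());
    }
}
```

Even a sketch this small raises requirements questions, such as who is allowed to mark, and how many marks are needed before a result counts.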

Another thought is some kind of ‘study buddy’ tool, which might be a combination of a note taking tool and a study schedule planner. When working as a software engineer, I kept a notebook, which was invaluable. Project logs are emphasised in the project module.

A final thought is some kind of simple ecommerce solution, such as a shop front (which reflects some of the projects, such as the inventory management systems that I’ve uncovered during my browse of GitHub). Admittedly, this isn’t very exciting, but it might well depend on what services are offered or sold. It might be for a community café or museum; scenarios that have been used in TM354.

Maybe this is all too complicated. Maybe the answer is a hotel booking system.

Further resources

Before sharing some final thoughts, and having found so many examples of projects within GitHub, it is worth mentioning the following article that also reflects the seminar that I mentioned at the start of this post:

Tu, Y.-C. et al. (2022) GitHub in the Classroom: Lessons Learnt, in J. Sheard and P. Denny (eds) Proceedings of the 24th Australasian Computing Education Conference. New York, NY, USA: ACM, pp. 163–172. Available at: https://doi.org/10.1145/3511861.3511879.

Reflections

The first ever version control system I was exposed to was CVS. A system administrator I worked with used it to keep track of text files that described user permissions. It wasn’t too long before I understood its usefulness. The grumpy director of the research institute where I worked asked: ‘when did you add all those users?’ A quick look at the change log gave exact dates when the change was made. A comment also indicated why the changes were made. The reply was: ‘I added them, because you asked for them to be added two years ago’.

When I changed jobs, I started to use Microsoft Visual SourceSafe (VSS), which is mentioned in the Ruparelia article. Again, I could see its usefulness. We could add labels to entire code bases, enabling us to retrieve all the source code that was a part of a very specific release of software. We could roll back if we needed to. It was a tool that we could use to protect ourselves, from ourselves. VSS has since been discontinued. Microsoft now uses Git.

There are always more things to do. I’ve installed Git. I’ve made a note of all of the commands. Although I’m more familiar with Java, I need to know more about Python, and how it can be used to manipulate XML files and help with audio processing. I’m then going to create a Git repository.

Acknowledgements

Many thanks to Tamara Lopez for kindly sharing some of the resources and articles that are mentioned in this blog.