In February 2018 I went to a 'Teaching programming across STEM' workshop that was organised by my colleague Michel Wermelinger. The aim of the workshop was to bring together colleagues from different parts of the STEM faculty to share experiences of how they teach programming, raise awareness of each other’s plans, discuss different types of provision, and share examples.

What follows is a rough summary of the notes that I took during the day, augmented by a quick look at some of the slides that were prepared for the workshop (OU staff link). The aim of these notes is to help me remember what happened, and to provide a future reference for anyone who might be interested in the teaching and learning of programming at the OU. Since there was a six-month gap between the event and the writing of this blog, I’m sure I’ve forgotten some important elements and aspects, but I hope these notes are both pretty accurate and useful.

Introduction

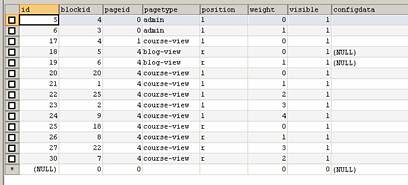

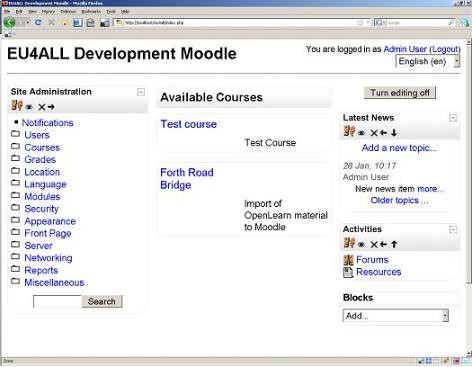

The event was introduced by Michel, who said that the day was split into two parts: a morning ‘supply side’ section, which included a series of talks, and an afternoon ‘demand side’ section, which included networking and workshop discussions. Michel kicked off the event by talking about OpenLearn materials that contain programming.

OpenLearn materials

OpenLearn is an Open University website that offers free online short courses for anyone who might be interested. It is sometimes used to share excerpts of real OU modules, but it also contains self-contained short courses. If you have an interest in an academic subject, the chances are that there will be an OpenLearn course that can tell you a little bit about it. It is, perhaps, not much of a surprise that there are OpenLearn resources about programming.

Simple Coding

Michel introduced us to an ‘hour of code’ introduction to programming using Python 2, known as Simple Coding (OpenLearn). Simple Coding introduces students to the fundamental concepts of variables, expressions, loops, if statements, lists, and function calls. It uses one problem throughout: keeping track of a restaurant bill.
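Simple Coding’s own code isn’t in my notes, so what follows is only a hypothetical sketch of how a restaurant bill might bring those concepts together in one small program. The item prices, the 10% service charge and the function name `total_with_service` are all made up for illustration.

```python
# Hypothetical example: the prices, service rate and function name are invented.

def total_with_service(prices, service_rate):
    """Return the bill total, adding a service charge if one applies."""
    subtotal = 0                      # a variable holding the running total
    for price in prices:              # a loop over a list of item prices
        subtotal = subtotal + price   # an expression updating the variable
    if service_rate > 0:              # an if statement
        subtotal = subtotal + subtotal * service_rate
    return subtotal


prices = [12.50, 9.00, 3.50]              # a list
bill = total_with_service(prices, 0.10)   # a function call
print(round(bill, 2))  # prints 27.5
```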

I made a note that this was part of the BBC Make It Digital season. To complement this, Michel has written a short blog post about Trinket. Finally, students are also encouraged to share their code on social media.

Learn to Code for Data Analysis

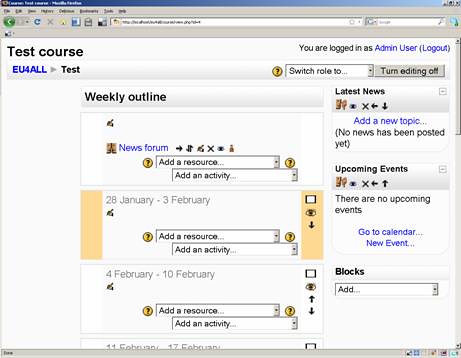

Another OpenLearn resource is called Learn to Code for Data Analysis (OpenLearn). This course started life as a four-week, 20 to 30 hour FutureLearn MOOC. It makes use of Python 3, function definitions and loops, along with the R-like Pandas library, which is used for data analysis. It also uses (I’m copying from my notes here) Jupyter notebooks with Anaconda or cocalc.com.

The course applies something called the First Principles of Instruction and adopts a problem-driven approach, where students are given a weekly project to clean, merge and manipulate data. Students work with authentic open data from organisations such as the World Health Organisation and the UN.
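My notes don’t include any of the course’s code. As a rough sketch of what ‘clean, merge and manipulate’ can mean, here is a plain-Python version using made-up figures (not real WHO or UN data) and hypothetical function names. The course itself uses Pandas, where methods such as `dropna` and `merge` do this kind of work.

```python
# Made-up figures for illustration only; these are not real WHO or UN data.
populations = {"A": 1_000_000, "B": 2_000_000, "C": None}  # None marks a missing value
cases = [("A", 120), ("B", 300), ("C", 45), ("D", 10)]


def clean(table):
    """Cleaning: drop entries whose value is missing."""
    return {country: value for country, value in table.items() if value is not None}


def merge(pops, case_list):
    """Merging: an inner join on country, keeping countries found in both sources."""
    merged = {}
    for country, count in case_list:
        if country in pops:
            merged[country] = (pops[country], count)
    return merged


def rate_per_100k(merged):
    """Manipulating: work out cases per 100,000 people for each country."""
    return {country: count / pop * 100_000 for country, (pop, count) in merged.items()}


rates = rate_per_100k(merge(clean(populations), cases))
# Only A and B survive: C has no population figure and D has no population entry.
print(rates)
```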

TM112 Introduction to Computing and IT 2

TM112 Introduction to Computing and IT 2 was introduced by Paul Piwek, module chair. Paul explained that TM112 builds on TM111 and prepares students for level 2 study, where students go on to study M250 (which uses Java) and M269 (which uses Python), before making their way to TM351 (which is mentioned later).

The module has three themes: essential information technologies, problem solving with Python, and information technologies in the wild. There are three spiral-bound books, so students can put them down next to their computer and practise typing in code.

Students will be using turtle graphics with Python 3, Baby Pandas (a library that is used for data processing and analysis), Jupyter notebooks and an editor like IDLE. The module places particular emphasis on the teaching of problem solving skills and the construction of algorithms. Students are given programming practice and assessment using data from the Office for National Statistics.

There are also formative quizzes with CodeRunner, which are marked for engagement to help students build mental models of what happens at an abstract level when programs are run.

SM123 Physics and Space

Jimena Gorfinkiel introduced SM123 Physics and Space which is studied after students have completed S111 Questions in Science.

Students are given four weeks of Python 2 programming that is based on the science they are learning. Currently, there is no other programming in the level 2 and level 3 physics or astronomy pathways. The aim is to help students get a feel for what programming and data analysis are all about. There is no expectation of developing specific competencies, but the aim is to help students understand principles of algorithm design.

The module design builds on ideas from other introductory materials, i.e. it makes use of Trinket (trinket.io), and the teaching approach is to scaffold students’ learning by providing activities and examples.

TM129 RobotLab

Jon Rosewell introduced TM129 Technologies in practice, a module that has three different 10 point bits: a section on programming, a section on networking, and a section about the Linux operating system.

The programming bit has a simulator for a small Lego robot, called RobotLab, and robotics is used as a way to introduce students to programming and to provide a useful context. It introduces basic control structures but not data structures. Students are asked to run code and watch it running, adapt code, and complete an open challenge.

Like Scratch, RobotLab is a drag-and-drop environment, but it can also create text programs, which students see when they are exposed to Python code. A comment I noted was that practical labs are important: ‘If you have simulation, and you do it well, there are opportunities for learning’.

An issue with the approach is that RobotLab is not a recognised language and is now showing its age. Support for RobotLab will finally end with the February 2019 presentation of TM129.

M250 Object-Oriented Java Programming

Anton Dil introduced M250 Object-Oriented Java Programming. In some cases, students study M250 in parallel with M269, which is described in the next section.

M250 uses Java and adopts an ‘objects first’ approach. Students are introduced to key object-oriented (and Java) concepts, such as protocols and attributes, classes, inheritance, composition, interfaces, access levels and the catching and throwing of errors. Other topics include collections and file input and output. There are also optional sections on design by contract and assertions.

Students use a range of different tools, such as the Java Development Kit (JDK) 7 and a graphical object-interaction environment, called BlueJ which enables students to manipulate objects and visualise relationships between classes. Some of the teaching makes good use of examples, such as illustrating methods using bank accounts, demonstrating classes by creating unexpected types of frogs, and demonstrating a marionette that is made from simple shapes.
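M250 itself is taught in Java with BlueJ. To keep to one language in this post, here is a hypothetical Python sketch of the kind of bank account example described above, showing a class with attributes and methods, inheritance, and an error being thrown (raised, in Python’s terms) and caught. The class and method names are my own invention, not the module’s.

```python
class InsufficientFundsError(Exception):
    """Raised when a withdrawal would overdraw the account."""


class Account:
    def __init__(self, holder, balance=0):
        self.holder = holder      # attributes hold the object's state
        self.balance = balance

    def deposit(self, amount):    # methods define the object's behaviour
        self.balance += amount

    def withdraw(self, amount):
        if amount > self.balance:
            raise InsufficientFundsError(f"{self.holder} cannot withdraw {amount}")
        self.balance -= amount


class SavingsAccount(Account):    # inheritance: reuses deposit and withdraw
    def __init__(self, holder, balance=0, rate=0.02):
        super().__init__(holder, balance)
        self.rate = rate

    def add_interest(self):
        self.deposit(self.balance * self.rate)


acct = SavingsAccount("Ada", 100)
acct.add_interest()
try:
    acct.withdraw(500)
except InsufficientFundsError as err:   # catching the error raised in withdraw
    print("Refused:", err)
print(acct.balance)
```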

Like other OU modules, M250 uses CodeRunner for interactive computer-marked assessments. An important part of the assessment is, of course, a series of TMAs with increasing weighting. Looking ahead, a future assessment principle may be to have less reading and more code writing, and to encourage the social dimension of programming. On this point I made a note to myself about whether pair programming might be something that could be introduced; doing it virtually and at a distance may present some interesting and unique challenges.

M269 Algorithms, data structures, computability

Michel Wermelinger introduced M269 Algorithms, data structures, computability, a module that gets to the heart of computer science. It introduces students to data structures, queues, how searches work, sets, binary trees, hash tables, graphs, generic techniques, approximation, complexity, big O notation, heuristics, and genetic algorithms. Needless to say, it’s also all about programming.
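As a small illustration of ‘how searches work’ and big O notation, here is a standard binary search over a sorted list. This is a generic textbook sketch rather than M269’s own material. Each step halves the range still to be searched, so it needs O(log n) comparisons, compared with O(n) for checking every item in turn.

```python
def binary_search(items, target):
    """Return the index of target in the sorted list items, or -1 if absent.

    Each pass halves the range [low, high] still to be searched, so the
    number of comparisons grows as O(log n) rather than O(n).
    """
    low, high = 0, len(items) - 1
    while low <= high:
        mid = (low + high) // 2
        if items[mid] == target:
            return mid
        elif items[mid] < target:
            low = mid + 1      # target can only be in the upper half
        else:
            high = mid - 1     # target can only be in the lower half
    return -1


data = [2, 3, 5, 7, 11, 13, 17, 19]
print(binary_search(data, 13))  # prints 5
print(binary_search(data, 4))   # prints -1
```

Python’s standard library also provides the `bisect` module for searching sorted lists.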

The tools used in M269 include Python 3, Komodo Edit, and CodeRunner, which is used for all the TMA questions. For students who haven’t come up through TM112, it contains a Python crash course in week 2.

Given its challenging subject matter, M269 is a marmite course; some students respond well to the challenges it presents, whereas others offer more robust opinions. From a personal perspective, I remember studying a similar module when I was an undergraduate in the 1990s. I found it a challenging subject, but I later appreciated its importance and value when I became a professional software developer.

Open University Summer of Code

Neil Smith introduced an initiative called Advent of Code, which is described as: “a series of small programming puzzles for a variety of skill levels. They are self-contained and are just as appropriate for an expert who wants to stay sharp as they are for a beginner who is just learning to code. Each puzzle calls upon different skills and has two parts that build on a theme.” Neil also told us about the Google Summer of Code, which students can apply to.

Computing and Communications students are invited to take part in a voluntary programming challenge called the Summer of Code, which is designed to give students programming practice. Students are sent a two-part problem every Monday to Friday for two weeks, making ten problems in all. An interesting observation is that if students complete two problems, they will invariably complete all ten. Another observation was that some students were passing programming assessments but were unable to solve these problems; perhaps practice is the key, and problem solving can and should be taught explicitly.

TM351 Data management and analysis

Alistair Willis introduced TM351 Data Management and Analysis. M250 and M269 are prerequisites for TM351. TM351 isn’t a programming module as such, but it does expect programming competence that is commensurate with that of a level 3 student. The module explores the data lifecycle: the acquisition, preparation, analysis and presentation of data. Python is used for acquiring and cleaning data, and databases are used for storage. The module also demonstrates simple machine learning, statistical analysis and graph plotting.

TM351 uses Python 3, PostgreSQL, MongoDB, Pandas, Matplotlib and Jupyter notebooks. A point that I clearly noted was that students needed to learn how to use a library, and not just a language.

Like M269, it is also a ‘marmite module’ and presents students with some particular challenges. It requires students to combine different techniques to form solutions. In some cases students don’t have adequate coding skills, and may also lack the critical skills needed to choose and apply the right techniques.

An interesting point I noted was that the Python requirements for TM351 are lower than those for A-level. Another comment I noted down was: perhaps more needs to be done to help students prepare for this module, or the preparation needs to be done differently. In some respects, this is where TM112 Introduction to computing and information technology 2 will play an important role.

Python programming in S818

Andrew Norton and Mark Jones introduced S818 Space science which is a 60 point module that forms Stage 1 of the MSc in Space Science and Technology (F77). The module presents an introduction to Space Science and Technology, Apollo 11, Gaia and Rosetta probes, and the Curiosity Mars rover.

S818 is linked to the OpenSTEM lab. The programming that is carried out as a part of the module is linked to the physics that is applied; Python is used as a tool to work through data. Students are directed to “Learn to Code for Data Analysis” on OpenLearn, which Michel mentioned earlier.

During weeks 1 to 6, students are introduced to Jupyter notebooks and Pandas. Examples include a section on space weather, which looks at data from space weather satellites. In addition to these activities, students are asked to fit straight lines to data (SciPy, matplotlib), plot data of increasing complexity (using matplotlib), and find a numerical solution of Kepler’s equation in orbital dynamics (I’m not sure what this means). Students are also expected to use Python to handle and present results, even when they aren’t explicitly asked to do so.
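For anyone else who wasn’t sure what the Kepler’s equation exercise involves: Kepler’s equation, M = E − e sin E, relates the mean anomaly M (which grows steadily with time) to the eccentric anomaly E, which locates a body on an elliptical orbit of eccentricity e. It can’t be rearranged to give E directly, so it has to be solved numerically. I don’t know which method S818 uses; the sketch below assumes Newton-Raphson iteration, a common choice, with angles in radians and 0 ≤ e < 1. The function name `solve_kepler` and its default tolerances are my own.

```python
import math


def solve_kepler(mean_anomaly, eccentricity, tol=1e-12, max_iter=50):
    """Solve Kepler's equation M = E - e*sin(E) for the eccentric anomaly E.

    Assumes an elliptical orbit (0 <= e < 1) and angles in radians.
    Uses Newton-Raphson iteration on f(E) = E - e*sin(E) - M.
    """
    M, e = mean_anomaly, eccentricity
    E = M if e < 0.8 else math.pi          # a common starting guess
    for _ in range(max_iter):
        f = E - e * math.sin(E) - M        # how far E is from satisfying the equation
        f_prime = 1 - e * math.cos(E)      # derivative of f with respect to E
        step = f / f_prime
        E -= step
        if abs(step) < tol:                # stop once updates become negligible
            return E
    raise RuntimeError("Newton-Raphson did not converge")


E = solve_kepler(1.0, 0.5)
print(E)
print(E - 0.5 * math.sin(E))   # recovers M = 1.0, up to rounding
```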

Python and accompanying tools

Tony Hirst from the School of Computing and Communications gave a talk about the different tools and technologies that could be used with Python. Tony explained that Jupyter is an ecosystem of related bits, based on Python, one of which is known as IPython.

Echoing earlier presentations, Tony emphasised the importance of libraries and packages. There were packages that could be used to define and simulate circuits. There were packages related to chemistry, where users could type in the name of a compound and the software would look up its structure on the web. There were packages about astronomy, and also packages about music, which could work with musical representations and create playable MIDI files.

We were told about V-REP, a virtual robot experimentation platform, and Binder, a way to run Jupyter notebooks that are stored in a GitHub repository.

I made a note that Tony had also been looking at running software on OpenStack, which is an important part of TM352 Web, Mobile and Cloud Technologies.

The demand side

After a break for lunch, it was on to a series of short two-minute presentations by ‘various artists’, broadly entitled ‘the demand side’ for the simple reason that these are modules that need to apply programming in some way.

SXPA288 Practical science: physics and astronomy

Sheona Urquhart spoke about the second level physics and astronomy module SXPA288 Practical science: physics and astronomy. I made a note of some interesting words: “the thing that freaks them out is the terminal window” and “this is not a programming course … Excel is just grim”. I’m assuming that this comment is linked to the need to perform data analysis.

T312 Electronics

T312 Electronics, which was introduced by Jane Bromley, is a new module that has just started production. I noted down that there might be an opportunity to draw on the Python electronics libraries that Tony had mentioned, and Python might also be used to give hands-on experience of signal processing.

M346 Linear statistical modelling

This module was introduced by Karen Vines, and is currently going through a rewrite. The earlier version used software called GenStat (if I’ve made a note of this correctly), but there is a plan to move to the R programming language (Wikipedia), which was described as ‘command line’. The emphasis of this module is said to be on the statistical techniques rather than the software.

M373 Optimization

Optimisation was introduced by Tim Lowe. The module is all about numerical computing techniques, where ‘students use commands written by module team which implement methods’. I made a note that this is a module that is needed to support a new data science degree.

Physical Sciences Level 3

Ulrich Kolb introduced the BSc in Physics and mentioned that students needed programming skills. Students are required to do some simple Python coding and carry out simple data analysis tasks. Modules are split into 10 to 15 credit chunks, and these could be linked to programming.

Delivering programming tutorials

This bit of the workshop was delivered by yours truly, and I spoke from the perspective of a staff tutor. I introduced a popular model called TPACK, which categorises different types of knowledge in a simple way: there is pedagogical knowledge, technological knowledge about how to use tools, and knowledge about the content or the subject that is taught. I also mentioned that tools such as screen sharing could be really useful in the teaching of programming. I can’t quite remember, but I must have also spoken about the university group tuition policy.

PG Bioinformatics and cheminformatics

The final presentation of the day was by Mark Hirst, who briefly spoke about the requirements of bioinformatics and cheminformatics modules. There was a need to develop data handling, data analysis and data mining skills. Perhaps there was also an opportunity to use data from genome databases, and a subject that could be called ‘advanced coding for the biosciences’.

Discussion notes

The event ended with a wide ranging discussion. One theme was whether there was a need to explicitly teach different programming paradigms and the subject of comparative programming languages (I have to confess that I might have raised this, since it was one of my favourite subjects as an undergraduate, and one that I have found really helpful as a professional programmer). Another point was that it is important to acknowledge the tensions between the needs of education and the needs of training.

Opinions differed: one colleague insisted that we could all use C++, another said that we should use FORTRAN, and a further colleague suggested that Pascal should be used, for the simple reason that a strongly typed language encourages good programmer behaviours. This wide range of opinions suggested that there isn’t one language that can suit all our needs.

One interesting point was that our students are, of course, changing. There is now a new computing curriculum for schools, which is something that everyone needs to be aware of.

I also noted down the words: ‘the pedagogy of teaching computing across students is something that is common across school, and this is something that can be learnt from each other’. Another note I made was about the broad subject of the teaching of programming and how students move from novice to expert, namely that expertise is something that you acquire by doing; this is a point that links back to my own presentation about the importance of delivering practical programming teaching.

Some concluding questions were: ‘how do we teach programming in a cost effective way?’ and ‘should we set up a working group to co-ordinate the teaching of programming?’ A further point was that associate lecturer development is important, as is collaboration between different development communities.

Reflections

I learnt a lot from this event and it got me thinking about different ways of doing things. Not only did I learn about virtual robots that might be used in modules like TM129, I also started to wonder about the possibility of teaching through robotic kits (The Pi Hut). I also learnt about the importance of R, and about the flexibility and richness of libraries.

When I worked in industry, I did some serious coding in C, C++, Visual Basic and have even enjoyed confusing myself with the very many ways to write the same expressions in Perl, but I have yet to seriously get my hands dirty with Python. Thanks to all the presentations that were made during the day, I came away feeling inspired; I felt that I now need to do more to update my programming and development skills.

Acknowledgements

The words shared in this blog ultimately come from each of the presenters. A big shout out to Michel Wermelinger who did a brilliant job putting this event together.