Session: Putting the history of computing into different contexts

The voice of the machine: Tom Lean

Tom Lean from the British Library kicked off the second day with a presentation about a project that he is currently working on: An Oral History of British Science (blog). An important part of this project is the history of computing. Part of Tom’s role is to travel around the country interviewing people. Each interview lasts between 10 and 15 hours. The interviews are biographical; people are encouraged to talk about themselves, their environment, their tools and their procedures.

This life story approach to interviewing gives us a sense of the person themselves, their mannerisms and how they sound. It allows a more direct connection with the subject and with the people who played a part in its development. The longer interviews are edited down to highlights, which will then be made available through the British Library History of Science project website. I understand that researchers will be able to access the complete interviews.

Tom gave us a taste of the interviews by showing us a clip of Ray Bird talking about the HEC1 computer (YouTube). (For those interested, there’s also an Oral History of British Science YouTube channel.) The second clip was from an interview with Mary Lee Berners-Lee (Wikipedia), who spoke about ‘what’s fun about programming’.

All in all, a great talk and a great initiative. As an aside, I remember discovering another archive of oral histories of computing (University of Minnesota), collected by the Babbage Institute. Different interviewers (and institutions) are likely to explore and expose different issues, so both archives are invaluable to present and future researchers.

Telling the long and beautiful (hi)story of automation: Marie d’Udekem-Gevers

Marie took us on a tour of devices related to the history of computing, offering a slightly different perspective. Computing can also be understood in terms of mechanisms, mechanisation and automation, which eventually lead us towards data processing. We can think of the history of computing in terms of generations, but there is also an important pre-history that we need to be aware of.

When we think of the pre-history of computing we might consider mechanical and water clocks, and the development of the Jacquard Loom (Wikipedia). There is also the work of Pascal (who was mentioned earlier) and Babbage (whose trial machines are exhibited in the Science Museum). Marie introduced a simple distinction: internal versus external representations (and memory).

The difference between the two is that we can easily (and obviously) see external representations (of information), captured within cards, or as notches on a rotating wheel. Modern computers, of course, make use of hidden internal representations. The difference between internal and external connects to the notion of the immediately understandable and tangible versus the hidden and abstract nature of software. This connects to a wider (and later) debate about what we can gain by exhibiting the more recent generation of computing devices.

Competing histories of the internet: Christopher Leslie

Christopher Leslie (PPY homepage) teaches the history of internet technology at the Polytechnic Institute of New York. During his talk, Christopher mentioned a couple of books: one that I have read, and another that I had never heard of before. The first is ‘Where Wizards Stay Up Late’ by Hafner and Lyon. The second is ‘NERDS: A Brief History of the Internet’. (There are, of course, a number of other books about the history of the internet, such as ‘A Brief History of the Future’ by a former Open University colleague.)

A couple of Chris’s comments echoed some made during the previous day: that it is very easy to take a determinist view of the history of technology, in which developments occur gradually through a number of predetermined steps. When it comes to the history of the internet, there have been a number of different systems and innovations, emerging from different countries and locations. One interesting note that I made was that development occurs through a series of transitions, in which technology is moved from one context to another.

Chris mentioned the work of Donald Davies at the National Physical Laboratory, Teddington, and an important Association for Computing Machinery conference in 1967 where two people who had never met each other presented very similar ideas. In fact, I’ve read that the word ‘packet’ (as in the phrase ‘internet packet’) comes from Davies’s work, whereas the protocols that make the internet work come from work in the US (of course, I’m impossibly simplifying a whole swathe of really important history and technical detail here!). Chris also mentioned the French network Cyclades (Wikipedia), which also influenced the development of ‘the internet’.
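To make the packet idea a little more concrete, here is a minimal sketch in Python. It only illustrates the general principle (a message is chopped into numbered packets that can travel independently and arrive in any order, then be put back together); it is not a reconstruction of Davies’s NPL design or of any real protocol. The `Packet` type, the 8-byte packet size and the function names are my own hypothetical choices.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class Packet:
    seq: int        # position of this chunk within the original message
    total: int      # how many packets make up the whole message
    payload: bytes  # the chunk of data carried by this packet


def packetise(message: bytes, size: int = 8) -> list[Packet]:
    """Split a message into fixed-size, numbered packets (size is an arbitrary choice)."""
    chunks = [message[i:i + size] for i in range(0, len(message), size)]
    return [Packet(seq, len(chunks), chunk) for seq, chunk in enumerate(chunks)]


def reassemble(packets: list[Packet]) -> bytes:
    """Put packets back in order using their sequence numbers."""
    ordered = sorted(packets, key=lambda p: p.seq)
    if not ordered or [p.seq for p in ordered] != list(range(ordered[0].total)):
        raise ValueError("missing or duplicated packets")
    return b"".join(p.payload for p in ordered)


packets = packetise(b"Hello from Teddington!")
random.shuffle(packets)  # packets may travel by different routes and arrive out of order
print(reassemble(packets))  # b'Hello from Teddington!'
```

The sequence numbers are what make the scheme work: because each packet carries its own position, the network is free to deliver them in any order.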

I’ve also noted his point that the connections between people and communities are really important. Although defence funding was undoubtedly important, it was the connections between people, and the culture of openness within the academic community, that helped developments to occur. Another really important point that I noted is that we ‘need to fight determinism in the classroom!’ I totally agree.

My ‘take away point’ from Christopher’s presentation was that things are a whole lot more complex than they first appear; there isn’t one history, there are many.

Session: Games

I was initially surprised to see a session on games at this conference, but the reasons for its inclusion (and its importance) soon became apparent. This session resonated a lot with me, since I was once an avid player of games during the ‘cassette era’! There is also an increasing awareness that there is a whole history relating to the use of computers for entertainment.

Games and gaming can also make compelling museum exhibits; they can potentially be used to draw people in to other exhibits. This is why the session had the subtitle ‘games – and its potential as a Trojan horse’.

The popular memory archive: Helen Stuckey

Helen Stuckey, who travelled all the way from Australia, talked about a project that was all about collecting and exhibiting player culture from the 1980s. I never knew this, but apparently there was quite a unique gaming culture in Australia and many games were developed locally due to import restrictions.

The popular memory archive is a web portal. Gaming isn’t just restricted to the games as artefacts; there is a wider and richer picture of use and consumption that is important too. The portal allows visitors to save or record player memories. In the 1980s games were often the first way that people came into contact with computers (this was certainly my own experience). I have my own memories of walking to a newsagent and agonising over which game to buy with my own pocket money. This walk, and the action of loading the game into my Atari computer in my cramped bedroom could be considered as a part of my biography.

Other aspects of computing history include the history of production and the role of hobbies. Helen showed us the logo of the ‘Melbourne House’ software company, which I certainly remember from my teenage years. At the time, it had never occurred to me that this was an Australian company.

One of the challenges lies in choosing which artefacts and issues to focus on. Out of a potential 900 titles, 50 were chosen. Some of the themes that I noted include businesses, the rise of the bedroom coder, legal issues, and the role of the collector.

Fan sites, such as Hall of Light (a database of Commodore Amiga games) and World of Spectrum, also have an important role to play in documenting history. (I started to look into both of these sites, and quickly found that hours of my life had disappeared!)

I found the idea of a web-based resource really interesting. Just as we have citizen science projects, such as Galaxy Zoo, I can see that there is scope for participative, or citizen history sites. When there are so many memories and products and experiences out there, crowdsourcing is undoubtedly a powerful approach. I’m enthusiastic about old games, and after a quick search around on the web following Helen’s presentation, I can clearly see that I’m not alone.

Introduction of computer and video games in museums: Tiia Naskali

Tiia’s presentation was about a physical exhibition rather than a virtual one. Tiia spoke about gaming from the Finnish perspective and the hobbyist era between 1980 and 1990. (On reflection, this is an incredibly short period of time in which a whole lot happened).

Connecting to some of the points that Helen mentioned, Tiia made the point that games are a part of life histories. They are important within popular culture, and the work of that period can be shared and appreciated by newer generations.

What struck me as really interesting was Tiia’s summary of different game exhibitions that had taken place across the world. One of the most prominent was Game On, which apparently began at the Barbican, London.

Gaming exhibitions continue to have resonance today. In the month of this conference, the latest generation of games consoles was receiving a lot of attention: the Xbox One (Wikipedia) and the PlayStation 4 (Wikipedia).

This session led to questions relating to the challenges regarding digital preservation, i.e. whether we should be considering how to preserve digital worlds. For those who are interested in this project, more information can be found by visiting a project website that also contains a link to a final report. Other points raised during the question and answer session related to the authenticity of gaming experience and the potential societal impact of the use of games, which is, of course, the subject of on-going research.

Session: The importance and challenges of working installations

Computer Conservation Society – Its story and experience: Roger Johnson

Roger Johnson introduced the Computer Conservation Society (society website). It wasn’t an organisation that I had heard of before, but I’m so glad that I have now. The society was the brainchild of Doron Swade (Wikipedia), former curator at the Science Museum (who has written a cracking book about the trials and tribulations of building Babbage’s Difference Engine No. 2).

The society is a joint venture between the Science Museum and the British Computer Society and currently has approximately 800 members. It has a number of guiding principles. Firstly, membership is open to all, and it is free. It doesn’t own computers but instead has close links to museums. It also has a small rescue fund, which can be used to help preserve historically significant machines that are at risk of being disposed of.

During Roger’s talk, I made a note of the phrase ‘today is tomorrow’s history’. Given that so much is going on at the moment, a challenge lies in understanding what should be captured.

For those who are interested, the CCS also has its own newsletter, called Resurrection (CCS website).

Museums – what they can and should be doing : Charles Lindsey

Peter Onion, who works on the Elliott 803 (Wikipedia) at the National Museum of Computing (and probably does a whole range of other things too!) temporarily stepped in for Charles Lindsey (who was able to attend the question and answer session).

Peter, using Charles’s words, spoke about the objectives of a museum. Two objectives are to inform the public and to help serious researchers. Peter argued that perhaps there is a third: to preserve (and to develop) the skills necessary for the maintenance and operation of the objects, and to preserve the perspective of those who created them.

One really interesting (and important) point is that museums are about history, not fashion. One question was whether computing history ended in 1980. This echoed an earlier point that some modern computers can appear visually uninteresting; their mystery and complexity are hidden within integrated circuits. Working (historic) machines have the potential to add depth and may more directly expose the details that make things work. There is also the question of what stories we may tell: what issues earlier engineers (and maintainers) faced, what methods they used and what tools they applied.

History, nostalgia and software: David Holdsworth

We all know that hardware without software is useless. A laptop without an operating system or application software becomes a pointless and immutable mix of plastic, glass and electronics. Software is the stuff of computing (you might almost call software its ‘oxygen’), but so much of it is lost. One of the most obvious reasons is that software is inherently invisible, and increasingly so. This raises the important question of how to go about preserving (and also potentially exhibiting) software.

David showed us an interesting couple of web pages: a demonstration, through a web page, of an implementation of the Algol-60 programming language (Wikipedia) for the KDF9 computer (Wikipedia). For those who know something about the history of programming languages, Algol is a really important language. Think of it as the Latin of programming languages; it’s not used much these days, but you can see strong echoes of its design in programming languages of today, such as Java. (Being more of a software guy than a hardware guy, I felt that more might have been said about the history of languages.)

The fact that we can write programs in an old language through a web page is really cool. Such an approach allows us to sample the past and get a feeling for how things used to work. David argued (or so I have noted down) that we should ideally be able to browse and analyse source text, see software working and sample the user experience. I agree with him.

When it comes to digital preservation, David made the point that we need to read the original media and save it to new media, keeping a byte stream, and create software to manipulate and work with these byte streams. Not only is the software important, but so is the documentation. One way to deal with the documentation challenge is to scan existing manuals. Documentation, however, can be flawed and incomplete. The best representation of how a machine worked is an emulator: a well-written emulator becomes a description of how the hardware operates.
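To get a feel for why an emulator can act as a description of hardware, here is a minimal sketch in Python of the fetch-decode-execute loop at the heart of most emulators. The machine itself is entirely hypothetical (a toy accumulator machine with six made-up opcodes, encoded as opcode × 100 + address); it is not the KDF9, the Baby or any other historical instruction set. The program in memory plays the role of the preserved byte stream.

```python
# Opcodes for a hypothetical accumulator machine (not any real historical instruction set).
HALT, LOAD, ADD, SUB, STORE, JNZ = range(6)


def run(memory: list[int], max_steps: int = 10_000) -> list[int]:
    """Fetch-decode-execute loop. Each instruction word is opcode * 100 + address."""
    memory = list(memory)      # work on a copy so the original 'media' is untouched
    acc, pc = 0, 0             # accumulator and program counter
    for _ in range(max_steps):
        opcode, address = divmod(memory[pc], 100)   # fetch and decode
        pc += 1
        if opcode == HALT:
            return memory
        elif opcode == LOAD:
            acc = memory[address]
        elif opcode == ADD:
            acc += memory[address]
        elif opcode == SUB:
            acc -= memory[address]
        elif opcode == STORE:
            memory[address] = acc
        elif opcode == JNZ:                         # jump if accumulator is non-zero
            if acc != 0:
                pc = address
        else:
            raise ValueError(f"unknown opcode {opcode} at address {pc - 1}")
    raise RuntimeError("step limit reached without HALT")


# Multiply 6 by 7 by repeated addition. Data lives at addresses 20-23.
program = [
    120,  # LOAD  20   acc = result
    221,  # ADD   21   acc += 6
    420,  # STORE 20   result = acc
    122,  # LOAD  22   acc = counter
    323,  # SUB   23   acc -= 1
    422,  # STORE 22   counter = acc
    500,  # JNZ   0    loop while counter != 0
    0,    # HALT
] + [0] * 12 + [0, 6, 7, 1]   # 20: result, 21: multiplicand, 22: counter, 23: constant 1

print(run(program)[20])  # 42
```

Every rule about how the machine behaves lives in that one loop, which is the sense in which a careful emulator doubles as documentation.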

On the subject of emulators and software, I set myself a thought experiment: ‘what kind of exhibit would I create if I wanted to present something about the history of software?’ Some random thoughts include: the presentation of a command-line interface (echoing the use of a teletype), followed by the use of DEC terminals. This would then be followed by a hands-on emulation of a Xerox Alto, followed by another emulation of an Apple Lisa (perhaps even an actual machine). This could then be followed by a really early version of Windows, concluding with a touch screen tablet interface (running either iOS or Android). All these presentations got me thinking!

The Teenage Baby: Chris Burton

I visited the Museum of Science and Industry (MOSI website) when I was looking around Manchester before choosing to study Computer Science there as an undergraduate. Chris’s presentation has underlined that a repeat visit there is now long overdue.

The Manchester Small Scale Experimental Machine (SSEM), also known as the Manchester Baby (Wikipedia), was designed by Williams, Kilburn and Tootill and is considered to be the first stored-program computer in the world. Chris described a programme to construct a replica of this very first machine.

The reconstruction was completed in 1998. Chris told a fascinating story of the role the machine had played within the museum: a story of movement and construction, of relocation and restarting. The replica has now been in operation for fifteen years, and it is worth remembering that the original machine ran for only three.

Chris emphasised the very important role of volunteers. A volunteer can act as a guide, introducing the different aspects of the machine to visitors. Chris told us a story of a volunteer who held aloft a Williams tube and said, ‘this is what a flash drive looks like in 1948... and it only holds a millionth of a gigabyte’, raising curiosity and grounding the past in the technology of the present.

Physical reconstructions not only embody history, but also represent and echo some of the processes that occurred during the development of a machine. By recreating the past, we can not only develop skills, but also uncover challenges that the early designers and users faced.

Session: Reconstruction stories

Reconstruction of Konrad Zuse’s Z3 : Horst Zuse

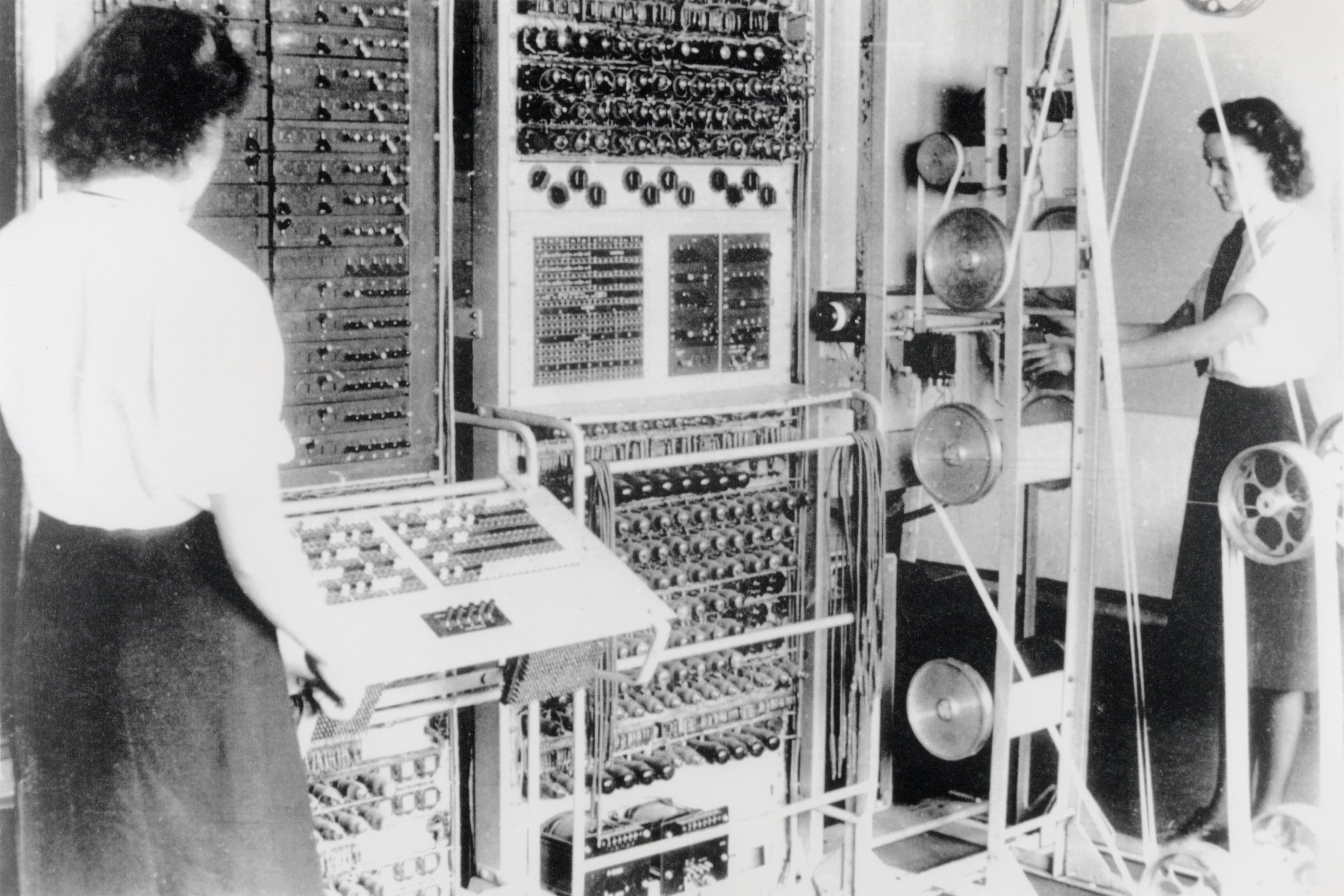

One of the truths in the history of computing is that there were a number of parallel developments happening around the world at the same time. In Britain there was the work at Bletchley Park, in the United States there was the work at the University of Pennsylvania, and in Germany there was the work of Konrad Zuse.

Horst Zuse, who made a presentation at this conference, is Konrad’s eldest son. I have known about Zuse’s work for a long time, and had heard that his very early machines were destroyed in World War II. What I didn’t know was the extent of Zuse’s creativity and innovation. His early machines, the Z1, Z2 and Z3, used binary floating point numbers. The Z3 can be considered to be one of the first functional programmable computers in the world. One of the differences between the Z3 and other early machines was that it made use of electromechanical relays. The Z3 apparently used two and a half thousand of them, with six hundred being used for the calculating unit.
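For readers wondering what ‘binary floating point’ means in practice, here is a minimal sketch in Python that splits a number into a sign, a binary exponent and a fraction, with the leading 1 of the mantissa left implicit. The 14-bit fraction width is an assumption chosen only for illustration; I’m not claiming this is the Z3’s exact word layout, and the function names are my own.

```python
import math

MANTISSA_BITS = 14  # assumption: a 14-bit stored fraction, chosen only for illustration


def encode(x: float) -> tuple[int, int, int]:
    """Split a non-zero x into (sign, exponent, fraction), x ~ (-1)**sign * 1.fraction * 2**exponent."""
    if x == 0:
        raise ValueError("zero needs a special representation in this sketch")
    sign = 1 if x < 0 else 0
    m, e = math.frexp(abs(x))        # abs(x) == m * 2**e with 0.5 <= m < 1
    m, e = m * 2, e - 1              # renormalise so that 1 <= m < 2 (leading 1 is implicit)
    fraction = round((m - 1) * (1 << MANTISSA_BITS))
    if fraction == 1 << MANTISSA_BITS:   # rounding carried into the next power of two
        fraction, e = 0, e + 1
    return sign, e, fraction


def decode(sign: int, exponent: int, fraction: int) -> float:
    """Rebuild the (rounded) number from its three fields."""
    return (-1) ** sign * (1 + fraction / (1 << MANTISSA_BITS)) * 2.0 ** exponent


print(encode(5.0))    # (0, 2, 4096): 5 = 1.25 * 2**2
print(encode(-0.75))  # (1, -1, 8192): -0.75 = -1.5 * 2**-1
print(decode(*encode(0.1)))  # close to, but not exactly, 0.1
```

The last line shows the trade-off at the heart of floating point: a wide range of magnitudes in a fixed number of bits, at the cost of small rounding errors.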

In 2008 Horst proposed building a new version, or reconstruction, of the Z3. The new machine could be used to teach the principles of computing (addressing the issue that today’s computing devices are more difficult to understand). This reconstruction was to make use of modern telecommunication relays, but this doesn’t diminish the challenge of creating such a machine.

Horst talked about the delivery of the relays, the racks in which they were housed, the construction of the memory and (if I remember correctly) some of the challenges regarding the input devices. The machine was initially located in the Technical Museum, Berlin, to accompany the Z1 reconstruction that took place between 1987 and 1989. Its final destination is likely to be the Konrad-Zuse-Museum in Hünfeld (museum website). The museum looks like a cool place to visit!

There were two surprises in store for me. The first was that Zuse created a binary calculating engine whilst independently rediscovering some of the principles that had been previously discovered by George Boole. Secondly, during the question and answer session, a delegate asked about something called Plankalkül (Wikipedia). I had never heard of this before. In essence, Zuse proposed the design of a programming language decades before it became practically possible.
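To show how binary arithmetic can be built from nothing but Boolean logic (the kind of operations that switching elements such as relays can implement), here is a minimal sketch in Python of a ripple-carry adder. It is a textbook illustration, not a description of the Z3’s actual adder circuitry, and the function names and the 8-bit width are my own choices.

```python
def full_adder(a: int, b: int, carry_in: int) -> tuple[int, int]:
    """One-bit full adder built only from Boolean operations (XOR, AND, OR)."""
    total = a ^ b ^ carry_in
    carry_out = (a & b) | (carry_in & (a ^ b))
    return total, carry_out


def add(x: int, y: int, width: int = 8) -> int:
    """Ripple-carry addition of two unsigned integers, one bit at a time."""
    carry, result = 0, 0
    for i in range(width):
        bit, carry = full_adder((x >> i) & 1, (y >> i) & 1, carry)
        result |= bit << i
    return result  # any carry out of the top bit is dropped (overflow wraps around)


print(add(5, 9))      # 14
print(add(200, 100))  # 44, because 300 does not fit in 8 bits
```

Each bit position needs only a handful of logical operations, which is why a machine made of simple on/off switches can do arithmetic at all.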

EDSAC Replica Project : David Hartley

Every ‘first’ is qualified. Zuse’s machine is considered to be the first programmable computer, the Manchester Baby can be considered to be the first stored-program computer, whereas EDSAC (Wikipedia) is considered to be the first computer that went into regular service with the specific intention of solving problems for its users. I didn’t know this, but EDSAC is also credited with helping three Nobel Prize winners.

The EDSAC reconstruction (project website) started in 2010, following a conversation with a co-founder of ARM (which designs the processors used in smartphones and a whole host of other devices). The project aims to have a working machine by 2015. As well as creating a machine, its corollary objectives include creating a new archive of related materials and resources and, importantly, building expertise. These objectives connect nicely to points that Peter Onion made when he was talking about the role of museums: that the very act of rebuilding (or preservation) enables past skills, tools and techniques to be rediscovered (and new approaches to be applied).

The machine is to be housed at the National Museum of Computing at Bletchley Park. It’s interesting that there will be two early machines with very different memory technologies: the cathode ray tube and the mercury delay line. I understand that there is a connection with the Dollis Hill research centre somewhere along the way, but I don’t yet fully understand the details. This just underlines the point that there’s always lots more reading to do.

For those who are interested, there’s a YouTube clip about the EDSAC replica project.

The Harwell Dekatron Computer : Kevin Murrell

The Dekatron computer, or WITCH (as it is affectionately known), strikes me as a bit of an oddball, but a very interesting one! It was designed for (or as a part of) the UK Atomic Energy Research Establishment, Harwell, Oxfordshire. Kevin told us that it is relay controlled, but has an electronic arithmetic and logic unit (the bit that does all the calculations). It also makes use of something called Dekatron valves, which serve as its memory.

After its working life at Harwell, it was moved to Wolverhampton and Staffordshire Technical College (which later became a university). Thanks to this move and its role in education, it survived, and it is now perhaps the oldest original working computer in the world.

More information about this interesting machine can be found through the following YouTube video: The reboot of the Harwell Dekatron/WITCH computer. The Computer Conservation Society also has a page about the WITCH (CCS website).

Capturing, restoring and presenting IRIS : Ben Trethowan

IRIS stands for Independent Radar Investigation System. Its role was to collect radar signals recording the movements of aircraft. Should there ever have been a mid-air collision, the data collected by IRIS could have been used to provide key evidence for any investigation. IRIS was said to have been built in the 1970s and ran until 2008, when it was decommissioned, which is an astonishing length of time for a single system.

Ben gave us some information about the technology. IRIS was based on a heavily customised DEC PDP-11; apparently the operating system had been customised too. When it comes to computer conservation, the march of time can have an impact. One of the challenges that Ben faced concerned magnetic tapes: over time, the magnetic layer that stores all the data had started to separate from the plastic layer beneath it. An important part of IRIS was its use of high capacity data cartridges, and these too had started to degrade. Rubber parts in the tape drives (or the cartridges) were beginning to perish.

As far as I can remember, the previous owners of IRIS contacted the computer history museum and asked if they would like it. Ben then got involved with the project to move the machine to Bletchley Park, working very closely with the donor organisation. In doing so, he gained a thorough understanding of the role of the machine and the context in which it was used.

What struck me about Ben’s presentation was that it amounted to a ‘good practice’ guide for computer conservation. Ben’s talk was very clear, and it was very interesting to hear about all the ‘other stuff’ that technical curators or ‘machine keepers’ need to take account of. Whilst a machine is interesting in its own right, understanding its context of use and sharing hard-won expertise is invaluable in terms of understanding how a machine works, its design and its broader organisational and cultural significance.

I’ve made a note (during Ben’s talk) that a good relationship with a donor organisation is important. It also struck me that good computer conservation isn’t just about dealing with the computer and its software. A computer forms a part of relationships between groups of people. As soon as a computer moves from its original context to a new one it can easily become disembodied. Understanding the human structures as well as the technical structures strikes me as a dimension that museums always need to be mindful of.

Reflections

The conference ended with a short panel session. I have to confess to being pretty mentally tired at the end of the two days and I didn't take in as much at this point as I would have liked! This said, the conference was just the right length; a third day would have been too much for me!

This part of the blog is a set of random reflections; nothing too controversial, just a set of thoughts on what struck me as the main themes. I’m sure that different people would have come away with different themes based on their own personal interests.

One of the key themes of the conference was (perhaps unsurprisingly) the role of museums in the history of computing. There are some fundamental challenges regarding preservation when many aspects of computing (and computer use) are intangible. There is also a question of which stories to present, how we might present them, and how we can make what is sometimes abstract visible, and therefore understandable. One approach, of course, is to use guides or interpreters to try to inspire visitors and help them to understand abstract ideas and principles. Grounding the role of machines in terms of their application or their wider social context also strikes me as very important.

Reconstruction of old computers featured heavily, which was a surprise (though in retrospect this was due more to my own unfamiliarity with what was happening in this sector than anything else). Reconstruction is a process in which the act of building both generates and reaffirms knowledge. It also strikes me as a fabulous way to go about researching some of the early designs and sharing expertise.

Another theme relates to the role of history and its relevance. A number of speakers said that the history of technology or computing isn’t taught a great deal. Computer history certainly wasn’t taught on my undergraduate degree, and this is a shame. I was also struck by the assertion that subjects such as computing are viewed as ‘ahistorical’. Yet scratch the surface and there’s a whole host of rich, deep and fascinating stories.

It was also a real delight to discover, by chance, that people who had a connection with the actual history of computing were able to come along to the conference. What also struck me was a sense of community, especially amongst those involved with the Computer Conservation Society.

A final word on what I (personally) got out of the conference. One of my research interests relates to the role that ‘place’ played in the development of computing, i.e. what happened and where. I also hope to travel to different places where these innovations took place. This, for me, will be a catalyst for adventure and learning. In fact, I’ve already taken a couple of journeys and hope to make many more in the coming years.

One thing that I’ve realised is that there is so much history on my doorstep. During the conference I was chatting to a former colleague who I was amazed to discover had a direct and immediate connection with a computer called LEO (Wikipedia), which was arguably the world’s first commercial computer. (There was the UNIVAC in America, but I would have to travel quite a way to visit the places where it was created). I know hardly anything about the LEO. I feel that a whole new journey of discovery is just about to begin.