I last read this in 2001, when I was on the Masters in Open & Distance Learning (as it was then called).

Whilst there are hints at e-learning, the closest this gets to interactive learning is the video-disc and the potential of CD-ROM. Actually, there was by then a developed and successful corporate training DVD business. In 2001 the OU sent out a box of resources at the start of the course: 16 books and, I recall, a pack of floppy discs to load 'ListServe' or some such early online collaboration tool.

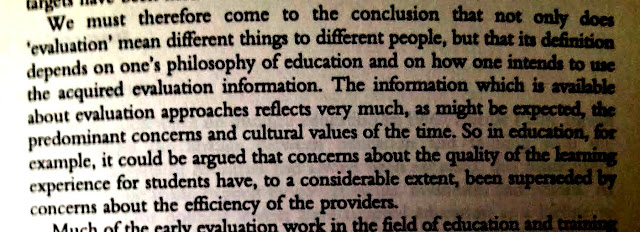

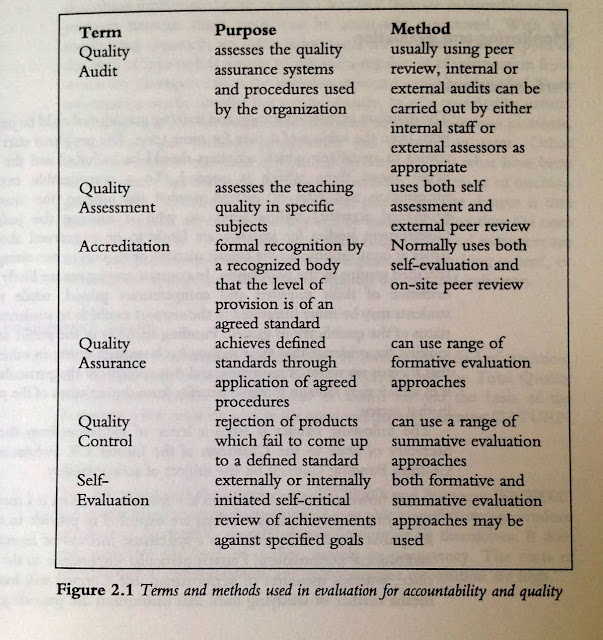

The nature of evaluation

Evaluation Stages

- Identify an area of concern

- Decide whether to proceed

- Investigate identified issues

- Analyse findings

- Interpret findings

- Disseminate findings and recommendations

- Review the response to the findings and recommendations

- Implement agreed actions

There's an approach to everything, and when it comes to evaluation it helps to be systematic. At what point, though, does your approach to evaluation become overly complex? Once again, think of the time and effort, the resources and cost, the skill of the person undertaking the evaluation and so on. I come from a TV background, and my old producer used the expression 'pay peanuts and you get monkeys': skill and experience have a price. Terminology differs: in the UK we speak of evaluation of the course and assessment of the student, whereas in the US 'evaluation' is used of the student.

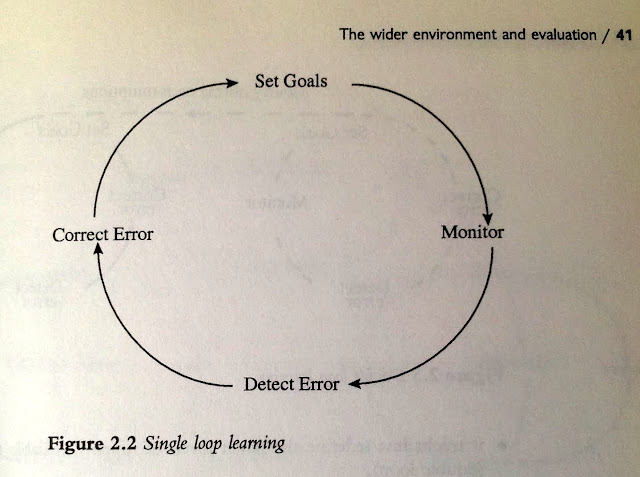

Improvement as a result of evaluation (Kogan 1989):

The idea of summative versus formative evaluation, i.e. judging the value of the course in achieving its task versus identifying aspects of the course that can be addressed and revised.

Anthropological vs. 'agricultural-botanical'.

Illuminative evaluation. People are not plants. An anthropological approach is required: observe, interview and analyse the rationale and evolution of the programme, its operations, achievements and difficulties within the 'learning milieu' (Parlett & Hamilton 1972).

CIPP (Stufflebeam et al. 1971) evaluation by:

- Context

- Input

- Process

- Product

CONTEXT: Descriptive data, objectives, intended outcomes (learning objectives)

INPUT: Strategy

PROCESS: Implementation

PRODUCT: Summative evaluation (measured success or otherwise)

UTILIZATION: None, passive and active.

CONCLUSION

There are other ways to quote from this chapter:

- Handwritten and transcribed

- Reference to the page, but this isn't an e-book

- Read out loud and transcribed for me using an iPad or iPhone

- A photo, as above

Does the ease with which we can clip and share diminish the learning experience? Where does the value lie in taking notes from a teacher and carefully copying up any diagrams they draw? These notes would form the basis for homework (an essay or test), with an end-of-term, end-of-year and then end-of-module exam as the final test.

GLOSSARY

REFERENCES

Daniel, J (1989) 'The worlds of open learning', in: Paine, N (ed) Open Learning in Transition, London, Kogan Page.

Parlett, M and Hamilton, D (1981) 'Evaluation as illumination: a new approach to the study of innovatory programmes' (originally published in 1972 as a paper for the University of Edinburgh Centre for Research in the Educational Sciences), in: Parlett, M and Dearden, G (eds) Introduction to Illuminative Evaluation: Studies in Higher Education, Guildford, SRHE, University of Surrey.