I attended my first joint OU SEAD/LERO research conference, which took place between 4 July and 6 July 23. SEAD is an abbreviation for the Software Engineering and Design Research Group, a research group hosted within the OU’s School of Computing and Communications. The conference was joined by members of LERO, the Science Foundation Ireland Research Centre for Software, which is based in Limerick.

What follows is a summary of the two days I attended. There was a third day that I didn’t attend, which was all about further developing some of the research ideas that were identified during the conference, and researcher professional development.

The summary is intended for the delegates of the conference, and for anyone else who might be interested in what happens within the SEAD research group. All the impressions (and any accompanying mistakes in my note taking) are completely my own. What is summarised here isn’t an official summary. Think of it as a rough set of notes intended to capture some of the themes that were highlighted. It is also used to share some potential research directions and areas that are intended to be further developed and explored.

Day 1: Introductions and research discussions

Bashar Nuseibeh kicked off the day by highlighting the broad focus of the conference: to consider the role of software in society. Although I missed the first minutes of his opening address due to traffic, there was a clear emphasis on considering important related themes, such as social justice.

The first session was an ice breaker session. This was welcome, since I was an incomer to the group, and there were many delegates who I had not met before. We were asked to prepare the answers for three questions: (1) Who you are, including where you are based and your role? (2) What is your main research area/interest?, and (3) Something you love about your research and something you dislike. (Not bureaucracy!)

Having a go at answering these myself: I work as a staff tutor. My research interests have moved and changed, depending on what role I’ve been doing. Most recently, it has been about the pedagogy of online teaching and learning. When I was a researcher on an EU funded project, I was looking at the accessibility of online learning environments and supporting students who have additional requirements. Historically, my research has been situated firmly in the area of software engineering; specifically, the psychology of computer programming, maintenance of object-oriented software, and software metrics (informed by research about human memory). I have, however, returned to the domain of software engineering, moving from the individual to communities of developers by starting to consider the role of storytelling in software engineering, working with colleagues Tamara Lopez and Georgia Losasso.

What I like about the research is that it is really interesting to discover how different disciplines can be applied to create new insights. What can be difficult is that different disciplines can sometimes use different languages.

Invited talk: navigating the divided city

Next up was an invited talk by Prof. John Dixon from the OU’s Social Psychology research group. John’s presentation was about “intergroup contact, conflict, desegregation, and re-segregation in historically divided societies”. John described how technology was used to explore human mobility preferences, drawing on research carried out as a part of the Belfast Mobility Project. The project studies, broadly speaking, where people go when they navigate their way through spaces, and can be said to sit within an intersection between social science and geography. Technology was used by researchers to study activity space segregation and patterns of informal segregation, which can shed light on social processes.

John also highlighted tensions that a researcher must navigate, such as the tension between open science (where data can be made available to other researchers) and the extent to which it is ethical to share detailed information about the movement of people across a city.

There was a clear link between the talk and the theme: the connection between software and society. This talk also resonated with me personally: as a regular user of an activity tracker called Strava, I was already familiar with some of the ethical concerns that were shared. After becoming a user of Strava, I changed a couple of settings to ensure that my identity is disguised. Also, a year ago I noticed that the activity tracker had started to hide the start point and the end point of any activity that I was publicly sharing. A final point from this part of the day is that both technology and software can lead to the development of new methods and approaches.

Fishbowl: Discussing society and software

Talking of new methods and approaches, John’s talk (and a lunch break) was followed by an event that was known as the ‘fishbowl session’, which introduced a ‘conference method’ that I had never heard of before.

In some respects, the ‘fishbowl’ session was a discussion with rules. Delegates sat on one of ten chairs in the middle of the room, and had a conversation with each other, whilst trying to connect together either the main theme of the discussion (software and society) or some of the topics that emerged from the discussions. We were encouraged to discuss “anything where software has a role to play”.

The fishbowl discussed consequences of technology, collective education, critical thinking (of users), power of automation, concentration of power (in corporations), the use of AI (such as large language models), trade-offs, and complex systems. On the subject of AI, one view I noted down was that perhaps the use of AI ought to be limited to low risk domains, leaving the critical thinking to people (but this presupposes that we understand all the risks). There was also a call to ensure that AI tools explain their “reasoning”, but this also implicitly links back to points about skills and knowledge of users. This is linked to the question: how do we empower people to make decisions about the systems that they use?

Choices were also discussed. Choices by consumers, and by developers, especially in terms of what is developed, and what is good to develop. Also, when uncovering and specifying requirements, it is important to consider what the negatives might be (an observation which reminds me of the concept of ‘negative use cases’ which is highlighted in the OU’s interaction design module).

I noted down some questions that were highlighted: how do we present our discipline? Do we research how to “do software” and leave it up to industry? Should we focus on the evaluation of the impact of software on communities and society? An interesting quote was shared by Bashar, which was: “working in software research is working for society”.

A final reflection I noted was that societal problems (such as climate change) can be thought of as wicked problems, where there is no right answer. Instead, there might be solutions that are neither entirely right nor entirely wrong, or solutions that are better or worse than others.

It was difficult to distil everything down to a group of neat topics, but here are some headings that captured some of the points that were discussed during the fishbowl session: resilience, care, sustainability, education, safety and security, and responsibility.

At the end of the session, all delegates were encouraged to join a group that reflected their research interests. I joined the sustainability group.

Group Work 1 - Expansion of themes from the fishbowl

After a coffee break it was time to do some work. The guidance from the agenda was “to develop some proposals for future research (problem; research objectives; research questions; methods; impact)”.

The sustainability group comprised four members: three from SEAD, one from LERO.

After broadly discussing the link between sustainability and software engineering, we produced a sketch of a poster that shared the following points:

- How can we make connections and causal links between different (sub)systems explicit?

- How can we engineer software to be holistically ‘resource aware’?

- What is the meta-language for sustainable software systems?

- What are the heuristics for sustainable software systems?

On the surface of it, all these points are pretty difficult to understand.

The first point relates to the link between software, economics, and society. Put another way, what needs to be done to make sure that software systems can make a positive contribution to the various dimensions of our lives. By way of further context, the notion of Doughnut Economics was shared and discussed.

The second point relates to the practice of developing software. Engineers don’t only need to consider how to develop software systems that use resources in an efficient way, they also need to consider how software teams use and consume resources.

The third point sounds confusing, but it isn’t. Put another way: how do we talk about, or describe, or even rate the efficiency, or sustainability of software systems. Going even further, could it be possible to define an ISO standard that describes what elements a sustainable software system could or should contain?

The final point also sounds arcane, but when unpacked, begins to make a bit of sense. In other words: are there rules that software engineers could or should apply when evaluating the energy use, or overall sustainability of software systems? There are, of course, some links from this topic to the topic of algorithms and data structures (which is explored in modules such as M269 Algorithms, data structures and computability) which considers efficiency in terms of time and memory. A simple practical rule might be, for example: “rather than continually polling to check the status of something, use signals between software elements”. There is also a link to the notion of software patterns and architecture (with patterns being taught on TM354 Software Engineering).
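To make the polling-versus-signals rule a little more concrete, here is a minimal sketch in Python. It is my own illustration rather than anything that was presented at the conference. The function names (wait_by_polling, wait_by_signal, run_demo) and the timings are hypothetical, and counting how often a thread wakes up is only a rough proxy for wasted work, not a measurement of energy use.

```python
import threading
import time


def wait_by_polling(is_ready, interval=0.01):
    """Repeatedly check a status flag; return how many checks were made."""
    checks = 0
    while True:
        checks += 1
        if is_ready():
            return checks
        # The thread wakes up again after `interval`, even if nothing changed.
        time.sleep(interval)


def wait_by_signal(event):
    """Block until another thread signals; the waiter is woken only once."""
    event.wait()  # sleeps until event.set() is called elsewhere
    return 1


def run_demo(delay=0.2):
    """Compare both approaches against hypothetical work taking `delay` seconds."""
    # Polling: a timer stands in for the work that eventually sets the flag.
    done = threading.Event()
    threading.Timer(delay, done.set).start()
    polling_checks = wait_by_polling(done.is_set)

    # Signalling: the same work, but the waiter blocks until it is told.
    done = threading.Event()
    threading.Timer(delay, done.set).start()
    signal_wakeups = wait_by_signal(done)

    return polling_checks, signal_wakeups


if __name__ == "__main__":
    checks, wakeups = run_demo()
    print(f"polling made {checks} status checks; signalling woke {wakeups} time(s)")
```

In the polling version, the waiting thread is woken many times just to find out that nothing has changed. In the signalling version it stays asleep until there is something to do, which is the kind of heuristic the group had in mind.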

Day 2: Ideate and prototype

The second day kicked off with summaries from the various groups. The responsibility team spoke about the role of individuals, values, and organisations. The care group highlighted motivation, engagement, older users and how to help people to develop their technical skills. The education group had been discussing computing at schools, education for informed choices, critical thinking, and making sure that the right problem is addressed. The resilience group discussed support through communities, and the safety and security group asked whether safety related to people, or to process.

A paraphrased point from Bashar: “look to the literature to make sure that the questions that are being considered haven’t been answered before”. Also, reflecting on the earlier keynote: “consider radical methods or approaches, and consider the context when trying to understand socio-economic systems”.

Group Work 2 - ideate and prototype

Back in our groups, our task was to try to operationalise (or to translate) some of our earlier points into clearer research questions with a view to coming up with a research agenda.

Discussing each of the points, we returned to the meaning of the term sustainability, along with what is meant by resource utilisation by code, also drawing upon the UN sustainable development goals (https://sdgs.un.org/goals).

We eventually arrived at a rough agenda, which I have taken the liberty of describing in a bit more detail. The first point begins from a high level. Each subsequent point moves down into deeper levels of analysis, and the list concludes with a point about how to proactively influence change:

- What types of software systems or products consume the most energy?

- After identifying a high energy consuming product or system, use a case study approach to holistically understand how energy is used, also taking into account software development practices and processes.

- What are the current software engineering practices of developers who design, implement and build low energy computing devices, and to what extent can sharing knowledge about practice inform sustainable computing?

- What are the current attitudes, perceptions and motivations of the current generation of software engineers and developers, and how might these be systematically assessed?

- After uncovering practices and assessing attitudes, how might the university sector go about influencing organisations to enact change?

Relating to the earlier call to “draw on the literature”, a member of our team knew of some references that could be added to the reference section of our emerging research poster:

Lago, P. et al. (2015) Framing sustainability as a property of software quality. Communications of the ACM, Volume 58, Issue 10, pp.70–78. https://doi.org/10.1145/2714560

Lago, P. (2019) Architecture Design Decision Maps for Software Sustainability. 2019 IEEE/ACM 41st International Conference on Software Engineering: Software Engineering in Society (ICSE-SEIS), 25-31 May 2019, IEEE. https://doi.org/10.1109/ICSE-SEIS.2019.00015

Lago, P. et al. (2021). Designing for Sustainability: Lessons Learned from Four Industrial Projects. In: Kamilaris, A., Wohlgemuth, V., Karatzas, K., Athanasiadis, I.N. (eds) Advances and New Trends in Environmental Informatics. Progress in IS. Springer, Cham. https://doi.org/10.1007/978-3-030-61969-5_1

Manotas, I. et al. (2016) An Empirical Study of Practitioners' Perspectives on Green Software Engineering. 2016 IEEE/ACM 38th International Conference on Software Engineering (ICSE). 14-22 May 2016. https://doi.org/10.1145/2884781.2884810

Wolfram, N. et al. (2018) Sustainability in software engineering. 2017 Sustainable Internet and ICT for Sustainability (SustainIT). 06-07 December 2017. https://doi.org/10.23919/SustainIT.2017.8379798

(A confession: I added the Manotas reference when I was writing up this blog, since it looked like a pretty interesting recommendation, especially having previously been interested in the empirical studies of programmers).

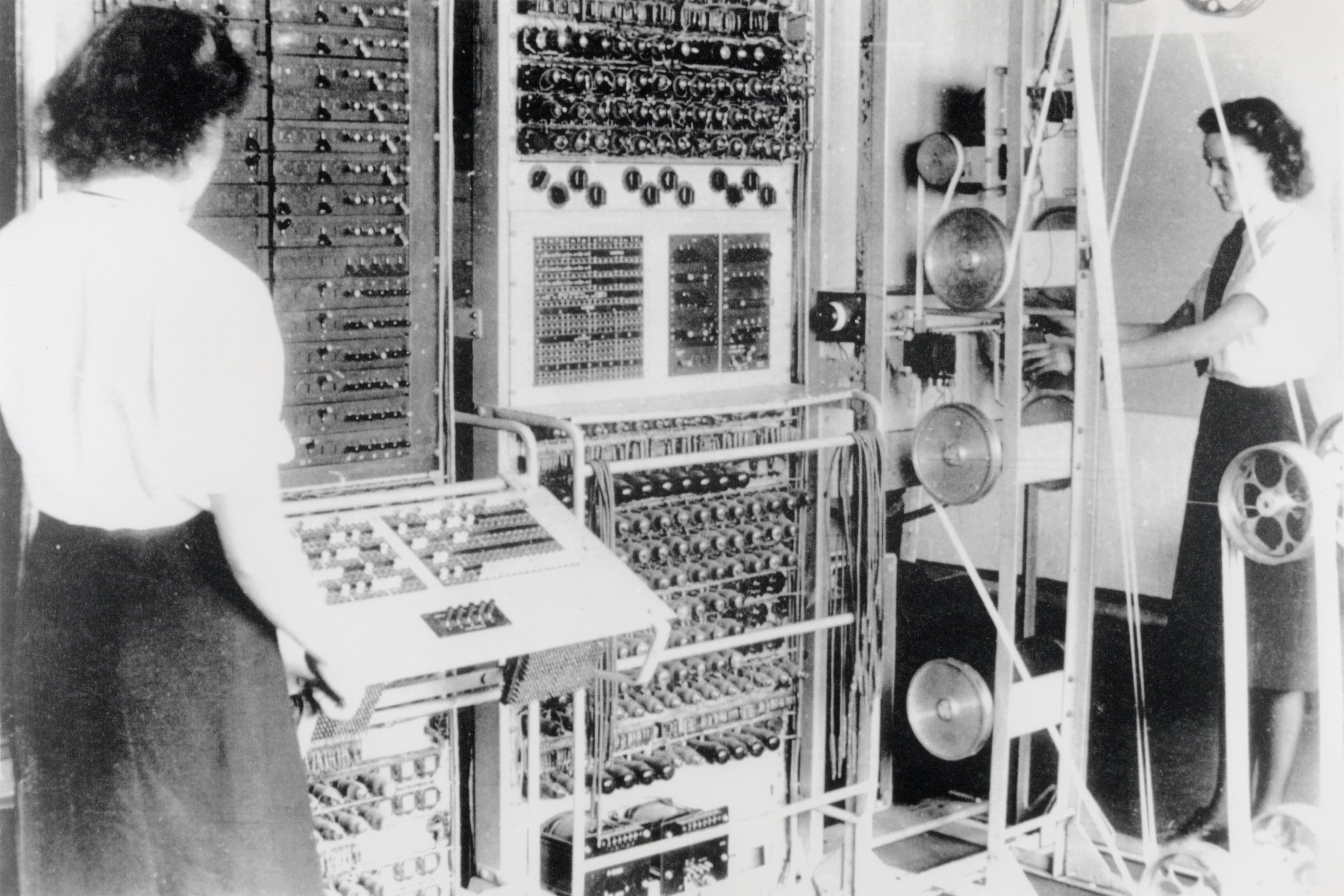

Conference visit: Bletchley Park

The second day concluded with a visit to Bletchley Park, which isn’t too far from the campus. It seemed appropriate to visit a place where socio-technical systems played such an important role. I had visited Bletchley Park a few times before (I also recommend the computing museum, which is situated on the same site), so I sloped off early to try to avoid the rush hour to London.

Day 3: Consolidate and plan next steps

This final day contained a workshop that had the title “consolidate and plan next steps” and also had a session about professional development. Unfortunately, due to my schedule, I wasn't able to attend these sessions.

Reflections

I really liked the overarching theme of the event: the connection between software and society. Whilst listening to the opening comments it struck me that there were some clear points of crossover between research carried out within the SEAD group, and the research aims of the OU Critical Information Studies research group.

It was great working with others in the sustainability group to try to develop a very rough and ready research agenda. It was also interesting to begin to discover how fellow researchers in other institutions had been thinking along similar lines and had already taken some of our ideas further.

One of my next steps is to continue with reading and exploring with an aim of developing a more thorough understanding of the research domain.

It was interesting that I was the only staff tutor at the event. It is hard for us to do research, since our time is split in three different ways: academic leadership and management (of part time associate lecturers), teaching, and whatever time remains can be dedicated to research. For the next few years, my teaching ‘bit’ of time will be put towards doing my best to support TM354 Software Engineering.

Looking forward, what I’m going to try to do is to integrate different aspects of my work together: integrate the teaching bit with the research bit, with the tutor management bit. I’m also hoping (if everything goes to plan) to tutor software engineering for the first time.

As well as integrating everything together, another action is to begin to work with SEAD colleagues to attempt to put together a PhD project that relates to sustainable computing.

Update 20 July 23: After doing a couple of internet searches to find more about DevOps, I discovered a new book entitled Building Green Software (O'Reilly), which is due to be published in July 24. I also found an interview with the lead author (YouTube), and learnt about something called the Green Software Foundation. I feel really encouraged by these discoveries.